Define crisp goals with a measurable plan, then align stakeholders early. This foundation makes findings and decisions visible and keeps discussions focused on observable data rather than opinions. Treat the plan as a living contract that guides iterations and supports a quick return on investment.

Examine user tasks through a mix of approaches to reveal actual behavior. Gather insights remotely via moderated sessions and lightweight unmoderated tasks, complemented by brief on-site checks when possible. Knowing where users stumble helps teams target changes, and the combination of methods increases the reliability of findings.

Record each issue in detail (context, exact action, obstacle, and outcome), then translate the findings into an implementation plan. Let the analyzed data, together with a score and a value estimate for each item, decide what to fix first. This keeps decisions consistently aligned with goals.

Build a practical implementation backlog: assign owners, set milestones, and maintain a living sheet that tracks progress and impact. Present a concise estimate of the expected return and the value each change offers to secure support from leadership and product teams.

Maintain ongoing discussions with product, design, and engineering, and post digest-level summaries to keep everything visible. Beyond the obvious issues, capture hidden friction points and tie every update to a goal. Goal-linked updates keep remote teams aligned, and documenting each examined issue keeps the analysis traceable for future decisions.

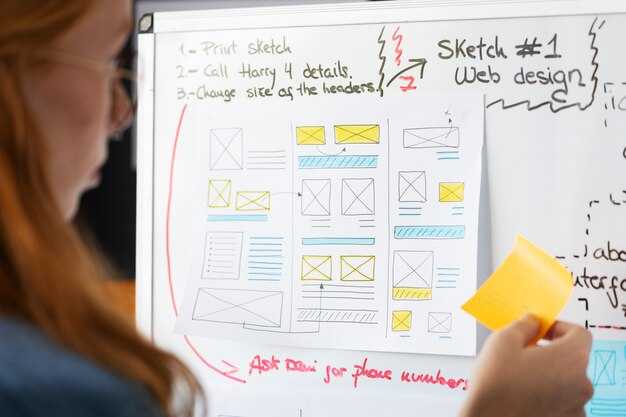

UX Audit Plan and Guide

Record three core customer journeys across commerce sites, map each issue to a 0–5 severity scale, and prioritize the fixes that close the biggest funnel gaps this week. The approach is step-by-step and keeps the team focused on what actually moves conversions.
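A minimal sketch of this triage step, assuming each issue carries a 0–5 severity and a hypothetical `funnel_gap` value (the share of sessions lost at the step where the issue occurs). Issues are ranked by severity first and funnel gap second; the field names, tie-breaking rule, and example issues are illustrative assumptions, not a prescribed scoring model.

```python
from dataclasses import dataclass


@dataclass
class Issue:
    journey: str       # e.g. "checkout", "search", "product detail"
    description: str
    severity: int      # 0 (cosmetic) to 5 (blocks the task)
    funnel_gap: float  # hypothetical: share of sessions lost at this step, 0.0-1.0

    def __post_init__(self) -> None:
        # Reject scores outside the 0-5 scale used in the audit.
        if not 0 <= self.severity <= 5:
            raise ValueError(f"severity must be 0-5, got {self.severity}")


def prioritize(issues: list[Issue]) -> list[Issue]:
    """Sort by severity (highest first), then by the size of the funnel gap."""
    return sorted(issues, key=lambda i: (i.severity, i.funnel_gap), reverse=True)


if __name__ == "__main__":
    # Illustrative issues, not real audit data.
    backlog = [
        Issue("search", "No results for common misspellings", 3, 0.12),
        Issue("checkout", "Coupon field resets the form", 5, 0.08),
        Issue("product detail", "Size chart hidden below the fold", 3, 0.20),
    ]
    for issue in prioritize(backlog):
        print(issue.severity, issue.funnel_gap, issue.description)
```

Sorting on the tuple keeps the rule transparent: a severity-5 issue always outranks a severity-3 one, and the funnel gap only breaks ties.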

Scope alignment: pick the checkout, search, and product detail paths, and run sessions on both desktop and mobile. Take notes manually and record outcomes in a shared sheet, backed by analytics on page loads and drop-offs. Use color and aesthetic cues to flag design debt.

Session design: run two 45-minute rounds per path with a facilitator, a customer surrogate, and an observer. Use a manual checklist, prompt questions, and live color contrast checks. Capture proposed fixes and pin down the exact UI elements each change should touch, covering every step that matters in the path.
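The live color contrast checks can be scripted. The sketch below implements the WCAG 2.x relative-luminance and contrast-ratio formulas for `#RRGGBB` colors and compares the result with the 4.5:1 minimum that WCAG AA sets for normal-size text; the function names are illustrative.

```python
def _channel_to_linear(value: int) -> float:
    """Convert an 8-bit sRGB channel to linear light, per the WCAG 2.x definition."""
    c = value / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(hex_color: str) -> float:
    """Relative luminance of a color given as '#RRGGBB'."""
    hex_color = hex_color.lstrip("#")
    r, g, b = (int(hex_color[i:i + 2], 16) for i in (0, 2, 4))
    return (0.2126 * _channel_to_linear(r)
            + 0.7152 * _channel_to_linear(g)
            + 0.0722 * _channel_to_linear(b))


def contrast_ratio(fg: str, bg: str) -> float:
    """WCAG contrast ratio (L1 + 0.05) / (L2 + 0.05), where L1 is the lighter color."""
    l1, l2 = sorted((relative_luminance(fg), relative_luminance(bg)), reverse=True)
    return (l1 + 0.05) / (l2 + 0.05)


def passes_aa_normal_text(fg: str, bg: str) -> bool:
    """WCAG AA requires at least 4.5:1 for normal-size text."""
    return contrast_ratio(fg, bg) >= 4.5


if __name__ == "__main__":
    print(round(contrast_ratio("#000000", "#FFFFFF"), 2))  # 21.0
    print(passes_aa_normal_text("#777777", "#FFFFFF"))     # False: just under 4.5:1
```

Running the check on the actual foreground and background pairs captured during a session turns a subjective "looks faint" note into a pass/fail finding.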

Findings: support each issue with evidence (screenshots, URLs, and timestamped notes). Distill the findings into practices you can repeat on other sites, not just one page. Attach a brief impact estimate and a proposed lever for the fix, such as a CSS tweak, a content rewrite, or a navigation change.

Prioritization: build a backlog with items labeled by impact and effort. Use a simple matrix to decide whether to fix each item immediately or defer it, and record dependencies. Rather than chasing cosmetic changes, favor fixes that reduce cognitive load and improve checkout clarity; these tend to be the most effective.
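One way to make the matrix explicit, assuming impact and effort are each rated "high" or "low" and dependencies are listed by item ID: a high-impact, low-effort item with no open dependencies is fixed now, and everything else is deferred with a stated reason. The labels, IDs, and decision rule are a hypothetical sketch, not a fixed standard.

```python
from dataclasses import dataclass, field


@dataclass
class BacklogItem:
    item_id: str
    title: str
    impact: str  # "high" or "low"
    effort: str  # "high" or "low"
    depends_on: list[str] = field(default_factory=list)


def decide(item: BacklogItem, done: set[str]) -> str:
    """Return 'fix now' or a 'defer: ...' reason for one backlog item."""
    # Unfinished dependencies block an item regardless of its quadrant.
    blocked = [d for d in item.depends_on if d not in done]
    if blocked:
        return "defer: waiting on " + ", ".join(blocked)
    if item.impact == "high" and item.effort == "low":
        return "fix now"
    if item.impact == "high":
        return "defer: schedule as a planned project"
    if item.effort == "low":
        return "defer: batch with other small fixes"
    return "defer: reconsider later"


if __name__ == "__main__":
    done = {"CHK-1"}
    item = BacklogItem("CHK-2", "Inline validation on card form", "high", "low", ["CHK-1"])
    print(decide(item, done))  # fix now
```

Recording the reason alongside each deferral keeps the backlog honest when dependencies later clear.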

Delivery: produce a compact report with an executive summary, the top 5 issues, their rationale, and a step-by-step plan for the engineering team. Include a change log, and schedule a validation session with stakeholders to confirm alignment with business goals.

Next iterations: schedule a follow-up session after each release, measure the KPIs again, and adjust the plan. Anchor the work in customer outcomes: cross-check against analytics, support tickets, and commerce metrics to confirm the customer benefits, and add qualitative feedback from live sessions to make sure the changes land as intended.

Process consistency: standardize how findings are recorded, how visuals are described, and how colors are evaluated across sites. This keeps the work done across teams and sites consistent, with clear ownership for each practice.

Step 1: Define scope, goals, and UX questions aligned with business metrics

Preparation sets the foundation: map business metrics to user outcomes and lock them into a single, observable plan. Define the audience, channels, and core task flows you will review. Collect evidence from HubSpot and other CRM exports to ground decisions in real data; document assumptions, risks, and alignment plans.

Set clear goals tied to outcomes such as conversion, activation, retention, and revenue. Attach a baseline and a target to each, and establish a simple reporting cadence. This focus helps teams decide where to invest and makes it acceptable to adjust scope when evidence reveals trade-offs; it usually yields stronger prioritization.

Develop UX questions that drive action. For each goal, ask which task or scenario moves the metric, what constitutes success, and where users meet friction. Include context and messaging questions: does the path reduce cognitive load, is information easy to locate, are errors recoverable? Prioritize questions that yield concrete improvements.

The data and evidence plan combines instrumented analytics with manual observations. For early validation, collect average task time, completion rate, error rate, and path efficiency, plus attitudinal signals from short surveys. Store findings in a single plan document and link each item to a concrete action.
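A minimal sketch of computing these four metrics from session records. It assumes a hypothetical record per task attempt (duration, completion, error count, steps taken, and the optimal path length). Error rate is taken here as errors per attempt, and path efficiency as optimal steps divided by actual steps; both are common conventions, not the only possible definitions.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class TaskAttempt:
    seconds: float      # time spent on the task
    completed: bool     # did the participant finish?
    errors: int         # wrong clicks, validation errors, etc.
    steps_taken: int    # screens or actions actually used
    optimal_steps: int  # shortest known path for the task


def task_metrics(attempts: list[TaskAttempt]) -> dict[str, float]:
    if not attempts:
        raise ValueError("need at least one attempt")
    completed = [a for a in attempts if a.completed]
    nan = float("nan")
    return {
        # Design choice: average time covers completed attempts only.
        "avg_task_time_s": mean(a.seconds for a in completed) if completed else nan,
        "completion_rate": len(completed) / len(attempts),
        "errors_per_attempt": sum(a.errors for a in attempts) / len(attempts),
        # 1.0 means every completed attempt followed the shortest path.
        "path_efficiency": (mean(a.optimal_steps / a.steps_taken for a in completed)
                            if completed else nan),
    }
```

Keeping the definitions in one function means the same numbers can be recomputed after each release and compared with the baseline.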

Context and messaging alignment ensures the questions reflect the user's digital context and how messaging shapes behavior. Compare different flows to see which deliver stronger outcomes, and focus on where changes have the largest impact on retention and the overall experience.

Competitor and external signals: review competitor patterns and news to calibrate expectations without copying them. Use Wolfe-style critique to test assumptions against reality; gather external evidence, but let internal constraints drive decisions. Decide how much weight external signals carry in the final scope and how they inform the plans.

Deliverables and next steps: finalize a scope document, a crisp set of goals with metrics, and a prioritized list of UX questions. Appoint owners, set a lightweight timeline, and establish a data-collection plan for early validation. This creates a clear path for the project and keeps momentum into the next phase.

Step 2: Audit data collection in traditional analytics (tracking setup, data quality, event taxonomy)

Recommendation: map every tag to a single objective and validate the data flow so that collection is accurate and fast, adds minimal page load, and yields information that stakeholders and the brand team can act on. Make the data map and reports available for download to stakeholders.

Tracking setup

- Inventory all tags on landing pages and along key paths; confirm tags load quickly, avoid duplicates, and aren’t blocked by consent banners or ad blockers; verify that events fire on the expected interactions across devices.

- Ensure a single source of truth in the tag management system; standardize triggers so the same logic applies on desktop and mobile; implement fallback rules for users with limited connectivity.

- Validate data flow across platforms (e.g., analytics suites and data layers) so the same events map to the same properties; document the flow within auditing records and keep it accessible to the team.
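To check for duplicate firing, a small script can scan an exported event log. This sketch assumes a hypothetical export in which each event has a page-view ID, an event name, and a timestamp in milliseconds, and it flags identical events on the same page view that fire within a short window (500 ms here, an arbitrary default to tune).

```python
from collections import defaultdict


def find_duplicate_events(events: list[dict], window_ms: int = 500) -> list[tuple[str, str, int]]:
    """Return (page_view_id, event_name, timestamp_ms) for suspected duplicate fires."""
    by_key: dict[tuple[str, str], list[int]] = defaultdict(list)
    for e in events:
        by_key[(e["page_view_id"], e["event_name"])].append(e["timestamp_ms"])

    duplicates = []
    for (page_view_id, name), stamps in by_key.items():
        stamps.sort()
        # Compare each fire with the previous one for the same page view and event.
        for prev, curr in zip(stamps, stamps[1:]):
            if curr - prev <= window_ms:
                duplicates.append((page_view_id, name, curr))
    return duplicates
```

A near-simultaneous pair usually points to two tags or two triggers bound to the same interaction, which is exactly what the tag inventory should remove.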

Data quality

- Define a data-quality baseline with criteria for completeness, accuracy, and consistency; set a range of acceptable thresholds for missing values, timestamp skew, and outliers; track these in a living evaluation document.

- Align time zones and currency conventions across sources; enforce a standard (e.g., UTC) and note any on-site conversions that occur before reporting.

- Identify data-loss risks (consent changes, script failures, network drops) and record mitigations; monitor the loss rate so valuable signals are not lost unnoticed.
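A sketch of the baseline checks, assuming rows arrive as dictionaries and that the thresholds (2% missing values per required field, 5 minutes of clock skew) are placeholders to replace with your own. The `client_ts` and `server_ts` field names are hypothetical; timestamps are normalized to UTC with the standard library before skew is measured.

```python
from datetime import datetime, timedelta, timezone

MAX_MISSING_RATE = 0.02          # placeholder threshold: 2% missing per field
MAX_SKEW = timedelta(minutes=5)  # placeholder: client vs. server clock skew


def to_utc(ts: str) -> datetime:
    """Parse an ISO 8601 timestamp that carries an offset and convert it to UTC."""
    parsed = datetime.fromisoformat(ts)
    if parsed.tzinfo is None:
        raise ValueError(f"timestamp without time zone: {ts}")
    return parsed.astimezone(timezone.utc)


def quality_report(rows: list[dict], required: list[str]) -> dict[str, object]:
    report: dict[str, object] = {}
    for name in required:
        # Treat absent keys, None, and empty strings as missing.
        missing = sum(1 for r in rows if r.get(name) in (None, ""))
        rate = missing / len(rows) if rows else 0.0
        report[f"{name}_missing_rate"] = rate
        report[f"{name}_ok"] = rate <= MAX_MISSING_RATE
    report["skewed_rows"] = sum(
        1 for r in rows
        if r.get("client_ts") and r.get("server_ts")
        and abs(to_utc(r["client_ts"]) - to_utc(r["server_ts"])) > MAX_SKEW
    )
    return report
```

Writing the report to the living evaluation document on each run makes threshold breaches visible as trends rather than one-off surprises.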

Event taxonomy

- Develop a consolidated taxonomy that maps events to objectives; include a range of signals such as landing_page_view, click, form_submit, add_to_cart, checkout_initiated, and purchase; use a consistent naming convention (lower_snake_case) and attach core properties (page, location, value, currency).

- Specify mandatory versus optional properties for each event; document ownership to ensure accountability and quick triage when issues arise.

- Review legacy or redundant events and retire them within a controlled rollout; ensure naming supports quick recognition by reader groups and aligns with brand guidelines.
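The naming and property rules can be enforced automatically. This sketch uses the event names from the taxonomy above, but which properties are mandatory for each event is an illustrative assumption; it checks lower_snake_case naming, membership in the taxonomy, and the presence of mandatory properties.

```python
import re

SNAKE_CASE = re.compile(r"^[a-z][a-z0-9]*(_[a-z0-9]+)*$")

# Hypothetical taxonomy: event name -> mandatory properties.
TAXONOMY: dict[str, set[str]] = {
    "landing_page_view": {"page", "location"},
    "click": {"page", "location"},
    "form_submit": {"page"},
    "add_to_cart": {"page", "value", "currency"},
    "checkout_initiated": {"page", "value", "currency"},
    "purchase": {"page", "value", "currency"},
}


def validate_event(name: str, properties: dict) -> list[str]:
    """Return a list of problems; an empty list means the event conforms."""
    problems = []
    if not SNAKE_CASE.match(name):
        problems.append(f"name '{name}' is not lower_snake_case")
    if name not in TAXONOMY:
        problems.append(f"'{name}' is not in the taxonomy (legacy or undocumented?)")
        return problems
    missing = TAXONOMY[name] - set(properties)
    if missing:
        problems.append(f"missing mandatory properties: {sorted(missing)}")
    return problems
```

Events that fail the taxonomy lookup are good candidates for the controlled retirement of legacy events.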

Deliverables and governance

- Produce a concise data map and a downloadable checklist that the team can reuse; share with stakeholders to keep them informed and engaged.

- Assign ownership for each data source and event type; establish a regular cadence for reviews and a fast path for prioritizing issues discovered in the evaluation.

- Involve Shaan, a product owner from the branding group, to surface issues quickly during reviews and to help prioritize fixes based on impact and reach.

Key outcomes and reader-oriented notes

- Accurate tracking coverage: landing pages and critical funnels are fully represented in reports, with no unexplained data gaps.

- Solid data quality: completeness and consistency are demonstrated across sources, enabling reliable comparisons for the team and executives.

- Valuable signals: the event taxonomy identifies high-impact interactions and supports actionable recommendations for optimization.

Guidance for teams and groups

- Provide informative updates to reader groups; ensure the download is accessible and the reports load quickly for smaller teams and larger departments alike.

- Maintain user-friendly documentation that explains the tracking setup, data-quality rules, and taxonomy in plain terms.

- Document learnings and keep a living record to reveal issues early and drive continuous improvements within auditing workflows.

Step 3: Analyze funnels and user flows to uncover drop-offs with concrete metrics

Export funnel data for the current period, identify the top 3 drop-off steps, and set a measurable target to reduce leakage by 15% within 4 weeks. Current drop-off rates: sign-up 40%, checkout 28%, onboarding 18%.
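A sketch of the funnel arithmetic, assuming a hypothetical export of user counts per step. The drop-off at a step is 1 minus (users at the next step divided by users at this step). The 15% target is interpreted here as a relative reduction (a 40% drop-off becomes 34%); if your team means percentage points, adjust `relative_target` accordingly.

```python
def step_dropoffs(counts: list[tuple[str, int]]) -> list[tuple[str, float]]:
    """Drop-off rate between each step and the next, from (step, users) pairs."""
    return [
        (step, 1 - next_users / users)
        for (step, users), (_, next_users) in zip(counts, counts[1:])
    ]


def top_dropoffs(counts: list[tuple[str, int]], n: int = 3) -> list[tuple[str, float]]:
    """The n steps that leak the largest share of users."""
    return sorted(step_dropoffs(counts), key=lambda pair: pair[1], reverse=True)[:n]


def relative_target(rate: float, reduction: float = 0.15) -> float:
    """Target drop-off after a relative reduction (0.15 means 15% less leakage)."""
    return rate * (1 - reduction)


if __name__ == "__main__":
    # Illustrative counts, not real data.
    checkout = [("cart", 1000), ("shipping", 700), ("payment", 560), ("confirm", 504)]
    for step, rate in top_dropoffs(checkout):
        print(f"{step}: {rate:.0%} drop-off, target {relative_target(rate):.1%}")
    print(round(relative_target(0.40), 2))  # 0.34
```

Stating whether a target is relative or absolute up front avoids disputes later about whether it was met.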

Use interviews and session observation to explain root causes in terms of user goals and blockers, highlighting behavior at each step. This added context builds a solid case for changes and for inclusivity considerations in the experience. Keep the plan aligned with user needs and business aims so the team is clear on what to fix first.

Depth comes from a combined view: funnel analytics, path tracing, and task-level testing. Start with early hypotheses backed by interviews and data, validate them with rapid trials, and then scale the successful changes across cohorts. Align plans with objectives to achieve measurable improvements in the flow.

Case: Matt's onboarding flow shows a common pattern: the initial screens are clear, but friction arises at the first tutorial. The data and the interviews agree, and targeted messaging adjustments reduce drop-offs and align behavior with product goals.

Observing user interactions across devices helps ensure inclusivity. When observing edge cases, consider accessibility, language clarity, and form labels. Gather feedback from diverse users to improve the flow and reduce churn.

| Area | Metric | Current | Target | Data Source | Actions |
|---|---|---|---|---|---|
| Sign-up | Drop-off rate | 40% | 32% | Analytics events | UI simplifications, clearer copy |
| Checkout | Drop-off rate | 28% | 20% | Event logs | Streamline steps, inline validation |
| Onboarding | Completion rate | 60% | 75% | Product analytics | Guided tour improvements |

Then implement the plan, track progress, and adjust based on ongoing data collection.

Step 4: Validate findings with quick qualitative checks and direct user feedback signals

Begin by recruiting a small, targeted group of users. Run 3–5 rapid checks on 4–6 website elements tied to the corresponding findings, and record each reaction as you observe it.

During each check, look for gaps where the user path diverges from expectations, note whether confusion arises, and identify whether controls respond as intended. Capture signals concisely to form a quick evaluation of potential issues.

Document observations in a concise record that links each finding to the corresponding page or element, the observed behavior, and a suggested remedy. Each note covers what was expected, what actually happened, and the potential impact on task completion.

Use direct feedback signals: short prompts after each task, quick thumbs-up/down ratings, and quotes from testers together give a well-rounded set of cues. This improves the signal-to-noise ratio and helps you reach a concise conclusion without overburdening the test sessions.

Pair the qualitative signals with a lightweight analysis: map observations to a small set of themes, verify the themes against the earlier assessments, and note any mismatches tied to specific website elements. Together, these steps strengthen the core of your evaluation and inform next steps.
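A lightweight version of this mapping, assuming each observation has been hand-coded with one theme and that the earlier assessment is available as a set of theme labels (both hypothetical inputs). The sketch counts themes and lists which ones the checks confirmed, which appear only in the checks, and which appear only in the assessment.

```python
from collections import Counter


def compare_themes(observations: list[dict], assessed: set[str]) -> dict[str, object]:
    counts = Counter(o["theme"] for o in observations)
    observed = set(counts)
    return {
        "counts": counts.most_common(),
        "confirmed": sorted(observed & assessed),
        "only_in_checks": sorted(observed - assessed),     # new signals to investigate
        "only_in_assessment": sorted(assessed - observed),  # not reproduced with users
    }
```

Themes that appear only in the assessment are candidates for a re-check on the same path before anyone commits to a fix.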

Keep it pragmatic: don't rely on a single data point; use a balanced mix of observations and direct feedback to confirm or challenge the existing analysis. If gaps appear, plan targeted changes and re-run the checks on the same path.

Together, this loop (observe, record, assess, re-check) delivers concise, evidence-backed findings that drive immediate improvements to the website.

Step 5: Draft actionable recommendations and prioritize by impact and effort

Create a one-page action plan that links each finding to a high-impact, low-effort change and assigns a specific owner responsible for each outcome.

Build a checklist of recommended changes, with each item tagged by impact and effort. Sort the items into quick wins (low effort, high impact), mid-term bets, and long-run investments, and list the assets each one needs, such as visuals, copy, and code changes, to enable fast execution.

Prioritize the list using a two-axis view that measures impact (clicks saved, time on task reduced, or shift in satisfaction) against effort (design, development, and QA). For each item, specify which user task it improves and which system areas or platforms it touches, then group related items and apply zone-based segmentation to keep them aligned across devices.
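A sketch of the two-axis view under stated assumptions: impact is estimated as time on task saved per week (seconds saved per task multiplied by weekly task volume), effort as the sum of design, development, and QA person-days, and the bucket cut-offs (2 and 10 person-days, at least 1 hour saved per week for a quick win) are hypothetical placeholders. Items are ranked by impact per person-day.

```python
from dataclasses import dataclass


@dataclass
class Recommendation:
    title: str
    seconds_saved_per_task: float
    tasks_per_week: int
    design_days: float
    dev_days: float
    qa_days: float

    def __post_init__(self) -> None:
        if self.effort_days <= 0:
            raise ValueError("effort must be positive to compute impact per day")

    @property
    def impact_hours_per_week(self) -> float:
        return self.seconds_saved_per_task * self.tasks_per_week / 3600

    @property
    def effort_days(self) -> float:
        return self.design_days + self.dev_days + self.qa_days


def bucket(rec: Recommendation, quick_max_days: float = 2.0,
           mid_max_days: float = 10.0, min_impact_hours: float = 1.0) -> str:
    """Assign a delivery bucket; all cut-offs are placeholders."""
    if rec.effort_days <= quick_max_days:
        return "quick win" if rec.impact_hours_per_week >= min_impact_hours else "low priority"
    if rec.effort_days <= mid_max_days:
        return "mid-term bet"
    return "long-run investment"


def rank(recs: list[Recommendation]) -> list[tuple[str, str, float]]:
    """(title, bucket, impact hours per person-day), highest ratio first."""
    scored = [(r.title, bucket(r), r.impact_hours_per_week / r.effort_days) for r in recs]
    return sorted(scored, key=lambda t: t[2], reverse=True)
```

Any other impact unit (clicks saved, satisfaction shift) can replace `impact_hours_per_week` as long as every item is scored in the same unit.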

Evaluate feasibility with a cost estimate and the required tech stack, and set a range for execution time. Include dependencies, risk flags, and trade-offs to help leaders assess scope and keep the narrative consistent.

Attach notes from the gathered analytics, user feedback, and expert judgment. For each item, state the expected outcome and a success range. This helps establish credibility and keeps the narrative grounded in real data.

Define success criteria: reduce unnecessary clicks, improve how navigation feels, and raise aesthetic consistency across platforms. For each outcome, specify a measurable threshold (e.g., 15% fewer clicks, 20% faster task completion, a satisfaction score of 0.8 or higher). Track the satisfaction score as a KPI in quarterly reviews.
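These thresholds can be checked mechanically once before-and-after measurements exist. This sketch assumes hypothetical measurement names, reads "20% faster" as 20% less time per task, and assumes satisfaction is reported on a 0–1 scale, which the 0.8 threshold implies but the criteria do not state explicitly.

```python
def meets_success_criteria(before: dict[str, float], after: dict[str, float]) -> dict[str, bool]:
    """Compare post-release measurements against the example thresholds."""
    clicks_drop = 1 - after["clicks_per_task"] / before["clicks_per_task"]
    time_drop = 1 - after["task_seconds"] / before["task_seconds"]  # "faster" = less time
    return {
        "clicks_15pct_fewer": clicks_drop >= 0.15,
        "tasks_20pct_faster": time_drop >= 0.20,
        "satisfaction_0_8_plus": after["satisfaction"] >= 0.8,  # assumes a 0-1 scale
    }
```

Reporting each criterion separately shows which outcome still needs work instead of collapsing everything into a single pass/fail.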

Assign owners to groups: design, product, and tech leads; set a zone-based cadence for reviews and updates. Ensure the plan is consistent across teams and that progress is tracked in a central checklist to avoid drift.

Present the final view to stakeholders with a clear turning point: which changes will ship in the near term, what remains in the backlog, and how the changes will alter the look, feel, and overall experience of the product.

Next actions: gather the required inputs, resolve open uncertainties, revise the estimates, and lock the plan with leadership; this builds momentum for the upcoming release cycle.

How to Conduct a Successful UX Audit in 6 Steps – A Practical Usability Guide
