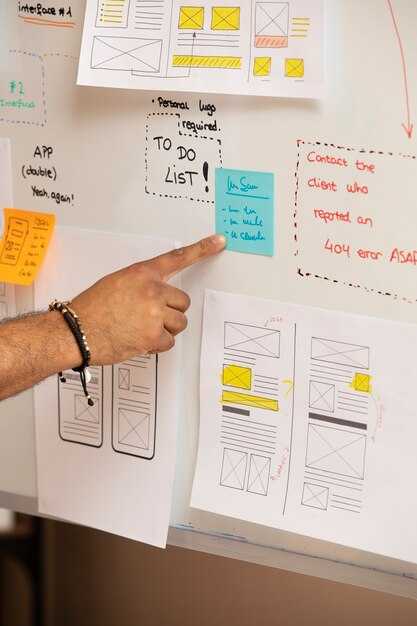

Begin with a one-page brief that identifies the audience, defines success metrics, and maps the action plan. The brief informs design choices, lays out a clear path ahead, and keeps decision-makers informed.

Adopt a high-level framework that ties user needs to business value. A thoroughly outlined plan maps research, insights, and designs, with a focus on building shared understanding.

Establish a learning loop that cycles through discovery, validation, and iteration. The loop makes design work more evidence-based, yields practical tactics for faster learning, and produces signals that inform backlog prioritization.

Tips for audience alignment include rapid usability checks, lightweight prototypes, and clear success signals, all of which help the team keep its momentum.

Living roadmaps guide the building of experiences across touchpoints. This approach accounts for diverse contexts, clarifies designs, and strengthens shared understanding.

Momentum builds when research stays action-driven, which moves outcomes toward greater value for users.

Practical UX Strategy for Language Learning: Lessons from Duolingo’s Gamified Approach

Launch a compact daily loop: 5-minute sessions that deliver tangible progress within days, then ramp up complexity as users’ experiences mature, building momentum year after year.

Use a thoughtful, easy-to-use core pattern built on feedback loops and visible progress. Examples include XP milestones, daily streaks, crowns for mastery, and bite-sized lessons that are easy to complete. Learners looking for quick wins stay motivated because this structure builds momentum and offers clear next steps.

Implementing this approach requires a cross-functional squad, instrumented analytics, and rapid testing. Within 4 to 6 weeks, deploy a minimal gamified module, gather qualitative feedback from researchers and input from learners, then iterate. Over years of practice, researchers notice that preferences vary by language family; accommodate that with flexible paths. Track metrics such as completion rate, session length, daily active users, and retention curves, and use that data to refine levels, rewards, and pacing.

That's why teams align product, design, and content around learning needs. This alignment drives steady engagement and results for learners and educators alike. Learners report that the experience is motivating and that feedback loops support ongoing growth, so outcomes feel within reach even for beginners.

Sessions tend to rise when prompts feel relevant, that is, when content aligns with learner needs. A lightweight research plan that combines qualitative interviews with A/B tests yields answers that feed design decisions. The result is outcomes that learners trust, which fuels long-term engagement, especially when researchers supply the insights.

Define scope, goals, and measurable outcomes for the UX strategy

Set a tight scope: three core areas (onboarding, product search, and checkout) within a 90-day cycle. This involves design, product, and tech teams, and decisions are data-driven, which keeps the focus on user value. Target friction reduction to save users time, keep steps actionable, and make just enough changes to move the metrics. Keep things simple: eliminate redundant steps, use real-time feedback to maintain momentum, shorten backlogs, and smooth the purchase flow. Apply the same metrics across funnels and make results visible to stakeholders.

Translate scope into three to five measurable targets, such as purchase rate, onboarding completion, checkout drop-off, and revenue per user. Each metric has a baseline, a target, and a timeline. This gives a clear decision path: leaders can think in terms of revenue impact, real-time dashboards surface traffic signals, and the plan stays actionable, well-defined, and directly tied to revenue.
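One way to make "a baseline, a target, a timeline" concrete is a small record per metric. The sketch below is illustrative only: the class name `MetricTarget`, the `progress` helper, the start date, and all numbers are assumptions, not values from a real product.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass(frozen=True)
class MetricTarget:
    """One measurable outcome: where it starts, where it should end, and by when."""
    name: str
    baseline: float
    target: float
    deadline: date

    def progress(self, current: float) -> float:
        """Fraction of the baseline-to-target gap closed so far, clamped to [0, 1].

        Works for metrics that should go up (completion) or down (drop-off).
        """
        gap = self.target - self.baseline
        if gap == 0:
            return 1.0
        return max(0.0, min(1.0, (current - self.baseline) / gap))


# Hypothetical 90-day cycle and hypothetical numbers, for illustration only.
cycle_end = date(2025, 1, 1) + timedelta(days=90)
checkout_drop_off = MetricTarget("checkout_drop_off", baseline=0.40, target=0.30, deadline=cycle_end)
onboarding = MetricTarget("onboarding_completion", baseline=0.50, target=0.70, deadline=cycle_end)

print(f"{checkout_drop_off.name}: {checkout_drop_off.progress(0.35):.0%} of the way to target")
```

Clamping the result keeps a dashboard readable when a metric regresses below its baseline or overshoots its target.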

Build a measurement blueprint that covers data sources, baselines, and cadences. Run experiments to prove impact and keep them aligned with revenue goals. Real-time dashboards provide traffic signals; when a metric dips, the team pivots and eliminates waste. The approach places UX at the forefront, with members across product, design, and tech filling the gaps. Follow this framework so that each release is complete and moves revenue and user satisfaction forward.

Translate user research into actionable requirements for a gamified learner experience

Begin with a requirements ledger that translates research findings into concrete, shippable items for a gamified learner experience. For each insight, write a compact user story with acceptance criteria, assign a category, classify its priority, and map delivery milestones. This approach yields usable recommendations, shows the team how to run faster improvement cycles, and keeps designed behaviors aligned with learning goals. These practices start your team toward a complete version that delivers usable feedback for rapid refinement.

Link each requirement to observable behaviors that learners exhibit during practice, quests, and drills. Capture signals such as time on task, repetition rate, drop-offs, and collaboration patterns. Define categories that cover motivation, feedback, progression, social learning, and reflection, and give each category its own metrics suite. Track only the critical signals at first.

Create a traceability backbone that maps research insights to user stories, priority, and feasibility. Attach acceptance criteria to each item and include success metrics such as completion rate, time to value, and usability score. Use this framework to guide your team toward a complete roadmap in which everything ties back to learner outcomes.
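The ledger and its traceability checks can be sketched as a plain data structure. Everything named here (`Requirement`, `untraceable`, `roadmap_order`, the 1 to 5 feasibility scale, and the sample insights) is a hypothetical illustration rather than a prescribed schema.

```python
from dataclasses import dataclass, field


@dataclass
class Requirement:
    insight: str        # research finding this item traces back to
    user_story: str
    category: str       # e.g. motivation, feedback, progression
    priority: int       # 1 = highest
    feasibility: int    # 1 (hard) to 5 (easy); this scale is an assumption
    acceptance_criteria: list[str] = field(default_factory=list)
    success_metrics: list[str] = field(default_factory=list)


def untraceable(ledger):
    """Return user stories that lack acceptance criteria or success metrics."""
    return [r.user_story for r in ledger
            if not r.acceptance_criteria or not r.success_metrics]


def roadmap_order(ledger):
    """Sort by priority first, then by easiest to build."""
    return sorted(ledger, key=lambda r: (r.priority, -r.feasibility))


# Hypothetical entries, for illustration only.
ledger = [
    Requirement(
        insight="Learners drop off when drills repeat too often",
        user_story="As a learner, I want varied drills so practice stays fresh",
        category="motivation", priority=2, feasibility=4,
        acceptance_criteria=["No drill repeats within one session"],
        success_metrics=["completion rate"],
    ),
    Requirement(
        insight="Learners want to see how far they have come",
        user_story="As a learner, I want a progress bar per quest",
        category="progression", priority=1, feasibility=3,
    ),
]

print(untraceable(ledger))
print([r.category for r in roadmap_order(ledger)])
```

Running the `untraceable` check before each planning session is one cheap way to keep every story tied to a measurable learner outcome.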

Implement a versioning plan that starts with a minimal offering: pilot with a sample cohort, collect data, and iterate. The aim of this first version is measurable improvement, faster validation, and clearer signals for scale. Coordinate with marketing to align messaging, and take feedback from learners, marketing stakeholders, and product owners to refine the offering.

To scale, codify patterns into templates that fit multiple contexts. Begin with a skeleton of templates for onboarding, practice, and quests, plus one for assessments. Support a variety of learning styles, and make sure each template enables faster adoption, clearer metrics, and repeatability. Translate every study result into a reusable pattern and implement it across teams from a single source of truth. Reusing patterns across categories as you iterate reduces risk and speeds up release cycles.

Duolingo-inspired design blueprint: XP, streaks, levels, practice queues, and feedback loops

We've structured a compact XP engine that assigns points for each action: completing a lesson, practicing, reaching daily goals, and maintaining a streak. This boosts motivation by signaling progress and provides a clear measure of effort. Use a transparent, standard scale: start with a small amount per action, increase it via multipliers for consistent activity, and cap the escalation to keep pacing comfortable. This setup takes little time to adopt.
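A minimal sketch of such an engine follows. The point values, the 5% per-day streak bonus, and the 2x cap are assumptions chosen for illustration; they are not Duolingo's actual numbers, and the function names are hypothetical.

```python
# Base XP per action. All values are illustrative assumptions.
BASE_XP = {
    "lesson_completed": 10,
    "practice_session": 5,
    "daily_goal_reached": 15,
    "streak_maintained": 5,
}

STREAK_BONUS_PER_DAY = 0.05  # assumed: +5% per consecutive day
MAX_MULTIPLIER = 2.0         # assumed cap so escalation stays comfortable


def streak_multiplier(streak_days: int) -> float:
    """Grow the multiplier with consistent activity, but never past the cap."""
    return min(MAX_MULTIPLIER, 1.0 + STREAK_BONUS_PER_DAY * max(0, streak_days))


def award_xp(action: str, streak_days: int = 0) -> int:
    """Return the XP earned for one action at the current streak length."""
    if action not in BASE_XP:
        raise ValueError(f"unknown action: {action}")
    return round(BASE_XP[action] * streak_multiplier(streak_days))


print(award_xp("lesson_completed"))                  # no streak yet
print(award_xp("lesson_completed", streak_days=10))  # boosted by the streak
print(award_xp("lesson_completed", streak_days=100)) # capped
```

Keeping the scale in one visible table makes it easy to explain to learners and to adjust multipliers during rollout.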

The blueprint outlined here guides planning and enables faster execution at the industry's pace of change.

Design aims: strengthen relationships with learners, build trust in the system, and support sustainable behavior. The XP framework connects with current marketing signals. It works when users understand how each action affects their trajectory, and a trustworthy UX reinforces reliance on the platform.

Better retention then follows naturally from consistent practice signals.

For teams facing churn, this blueprint offers concrete moves while keeping processes lean, with a clear link to traffic and value.

Practice queues are prioritized by proficiency gaps. Each queue holds 5–7 items, with pacing aligned to daily goals. Automated rotation keeps topics fresh, users can customize focus topics, and the queue updates each session to stay relevant.
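The queue rules above can be sketched as a single sort. This is one possible reading, not a known implementation: proficiency is assumed to be a score between 0 and 1 per topic, "rotation" is approximated by pushing topics practiced in the previous session to the back, and the function name and sample topics are hypothetical.

```python
def build_practice_queue(proficiency, focus_topics=(), recently_practiced=(), size=6):
    """Pick the next session's topics.

    proficiency: dict mapping topic -> score in [0, 1]; lower means a larger gap.
    focus_topics: topics the learner chose to emphasize (always ranked first).
    recently_practiced: topics from the last session, rotated to the back.
    size: queue length, restricted to the 5-7 range described in the text.
    """
    if not 5 <= size <= 7:
        raise ValueError("queue size must be between 5 and 7")

    def sort_key(topic):
        gap = 1.0 - proficiency[topic]
        return (
            topic not in focus_topics,    # learner-chosen topics first
            topic in recently_practiced,  # then topics not seen last session
            -gap,                         # then the largest proficiency gap
            topic,                        # stable tie-break by name
        )

    return sorted(proficiency, key=sort_key)[:size]


# Hypothetical learner profile, for illustration only.
scores = {
    "greetings": 0.9, "food": 0.4, "travel": 0.2, "numbers": 0.7,
    "family": 0.5, "verbs": 0.1, "colors": 0.8, "weather": 0.3,
}

print(build_practice_queue(scores, size=5))
print(build_practice_queue(scores, focus_topics={"colors"},
                           recently_practiced={"verbs"}, size=5))
```

Rebuilding the queue at the start of every session, with the previous session's topics passed as `recently_practiced`, is what keeps it relevant without any stored state beyond the proficiency scores.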

Feedback loops deliver micro-responses after each action: a visual cue, a brief tip, a reflective prompt. Signals stay timely, actionable, trustworthy; noise is minimized by surfacing relevant information only. A steady rhythm of positive reinforcement boosts momentum.

- Data modeling: XP, streaks, levels; outline current system schema; align with website analytics; map to standard events.

- UI signals: visual progress bars, level‑up banners, queue indicators; maintain accessible contrast; align with responsive designs.

- Practice queue engine: 5–7 items default; rules for rotation; support topic tagging; allow user customization; connect with learning pockets.

- Feedback loops integration: timing windows; micro-interactions; copy guidelines; test in planning sprints; measure comprehension.

- Rollout plan: phased release; monitor metrics; adjust multipliers; scale across bigger user cohorts; ensure marketing collaboration.

The metrics below guide planning, and results can be compared against industry benchmarks to improve outcomes for marketing and product teams. We've kept processes lean so that the system stays trustworthy, scalable, and tied to traffic signals on the website.

- Retention: 7-, 14-, 30-day cohorts; DAU/MAU ratio; steady growth in sessions.

- Engagement: XP completion rate; queue fulfillment; average session length; topic diversity.

- Purchases: in-app boosts or XP bundles; revenue per user; conversion from free to paid tiers.

- Traffic: referral sources; landing page performance; industry benchmarks; competitive position.

- Understanding: qualitative feedback; quick surveys; perceived ease of use; signal comprehension.

Understanding emerges as users internalize progress signals; measurable comprehension grows with practice.
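The retention figures in the list above (7-, 14-, and 30-day cohorts, DAU/MAU ratio) can be computed from raw activity dates. The sketch below assumes the "classic" definition, where a user counts as retained on day N only if active exactly N days after signup, and a 30-day window for monthly active users; both are assumptions, and teams often use different definitions. The data is made up.

```python
from datetime import date, timedelta


def day_n_retention(signups, activity, n):
    """Share of a cohort that was active exactly n days after signing up.

    signups: dict mapping user -> signup date.
    activity: dict mapping user -> set of dates on which the user was active.
    """
    if not signups:
        return 0.0
    retained = sum(
        1 for user, start in signups.items()
        if start + timedelta(days=n) in activity.get(user, set())
    )
    return retained / len(signups)


def dau_mau_ratio(activity, day, window_days=30):
    """Users active on `day` divided by users active in the trailing window."""
    window_start = day - timedelta(days=window_days - 1)
    dau = sum(1 for dates in activity.values() if day in dates)
    mau = sum(1 for dates in activity.values()
              if any(window_start <= d <= day for d in dates))
    return dau / mau if mau else 0.0


# Hypothetical cohort of four users who signed up on the same day.
d0 = date(2025, 1, 1)
signups = {user: d0 for user in "abcd"}
activity = {
    "a": {d0, d0 + timedelta(days=7), d0 + timedelta(days=14), d0 + timedelta(days=30)},
    "b": {d0, d0 + timedelta(days=7)},
    "c": {d0},
    "d": {d0, d0 + timedelta(days=7), d0 + timedelta(days=14)},
}

for n in (7, 14, 30):
    print(f"day-{n} retention: {day_n_retention(signups, activity, n):.0%}")
print(f"DAU/MAU: {dau_mau_ratio(activity, d0 + timedelta(days=14)):.2f}")
```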

Balance engagement with learning: flow, cognitive load, and rewards pacing

Begin with a defined flow target: 5–7 minute micro-slices, a personalized dose of challenge, timely feedback, and rewards that hold attention without causing cognitive overload. Nathan, a strategist, recommends this approach for online modules, for plans shared with professionals, and for creating an experience that feels successful.

Flow emerges when task difficulty matches skill. Three core criteria drive it: clear goals, immediate feedback, and seamless pacing. Meeting them eases adoption across modules and makes learning outcomes visible.

Cognitive load management relies on mapping concepts: break content into chunks, apply progressive disclosure, keep intrinsic load to 60–70% of capacity, and minimize extraneous load that distracts. Clearer transitions reduce confusion. Applying these measures makes outcomes more predictable, keeps the focus on the learner's real experience, and lowers risk.

Rewards pacing links to milestones within the plan. A dose of recognition at each milestone keeps motivation steady, and milestone reviews occur every 2 modules. For partner teams, avoid costly bursts of rewards and emphasize the real experience delivered to learners. This approach delivers measurable value for professionals, and what's next is mapped in subsequent plans for continuity.

Build a lightweight measurement plan: dashboards, experiments, and iteration cadence

Begin with four dashboards mapped to critical experiences across the website. Each shows goal progress, conversion rate, task completion, and satisfaction signals. Metrics align with customer journeys to identify friction points and opportunities for personalisation that move the needle.

Set a 14-day rhythm: run two lightweight experiments per cycle, implement a single change per experiment, measure results within seven days, and then decide whether to keep, modify, or drop the change.
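The keep, modify, or drop decision can be tied to a simple significance check. The sketch below uses a standard two-proportion z-test on conversion counts; the mapping of outcomes to decisions (significantly better means keep, significantly worse means drop, inconclusive means modify) and the 1.96 threshold are assumptions for illustration, and the sample numbers are invented.

```python
import math


def two_proportion_z(conv_a, n_a, conv_b, n_b):
    """z-score for the difference in conversion rate between control (a) and variant (b)."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 0.0
    return (p_b - p_a) / se


def decide(conv_a, n_a, conv_b, n_b, z_crit=1.96):
    """Map the test result to the cycle's three possible decisions.

    z_crit=1.96 corresponds to a two-sided 5% significance level.
    """
    z = two_proportion_z(conv_a, n_a, conv_b, n_b)
    if z >= z_crit:
        return "keep"    # variant is significantly better
    if z <= -z_crit:
        return "drop"    # variant is significantly worse
    return "modify"      # inconclusive: adjust the change and re-test


# Hypothetical results: conversions out of 1000 users per arm.
print(decide(100, 1000, 150, 1000))
print(decide(150, 1000, 100, 1000))
print(decide(100, 1000, 105, 1000))
```

With only seven days of data, many experiments will land in the inconclusive band, which is a reasonable outcome for a lightweight cadence rather than a failure of the test.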

Define the grain of the data, break it into cohorts, and track versions. Record a baseline before each change and observe the shifts afterward. This approach guides growth across the organisation.

Assign a dedicated owner to each dashboard, responsible for data quality, privacy, and compliance. Provide a versioned plan that communicates what to test, why, and the expected outcome.

Cadence for iteration: weekly reviews of results, monthly planning checks, and a quarterly reset of targets. Use growth-curve insights to steer experiments that strengthen customer relationships, grow membership, and enhance experiences.

Organisation benefits: a faster learning loop, lower cost of experimentation, and clearer alignment across teams. A lightweight approach keeps the experiences that team members build aligned with customers and improves regular feedback.

| Metric | Data source | Cadence | Owner | Target | Notes |
|---|---|---|---|---|---|
| Goal attainment rate | Analytics, CRM | Biweekly | Growth Lead | +15 percentage points | Drive learning across the organisation; track across cohorts; observe the adoption curve |
| Personalisation CTR | A/B tests | Per sprint | Personalisation Lead | +20% | Ensure a statistically valid sample; break data down by segment |
| CSAT | Post-transaction survey | Monthly | Experience Manager | 0.9 | Monitor the grain of feedback; respond quickly |
| Time on task | Event tracking | Biweekly | Product Analyst | +12% | Identify friction points; break down by page |
| Retention curve | Analytics | Monthly | Customer Growth Lead | +3% | Identify behaviours driving return |
