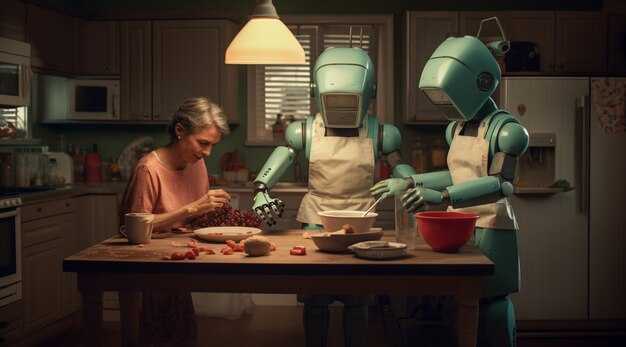

Rather than treating AI as a replacement, marketers should deploy it as a trusted assistant that handles data-heavy tasks while people steer strategy, storytelling, and relationships. What matters is choosing where AI adds value.

AI handles scheduling, testing, and scaling content. It delivers predictable outputs and gives planners, who set goals and timelines, a reliable guide. In recent pilots, teams reported 25-40% faster iteration cycles and a 15-25% increase in tests that moved from idea to iteration within a week.

Human creativity remains essential: it supplies artistry that understands culture and brand meaning. Machines accelerate output without fully grasping the questions that matter to a team’s goals, and understanding those nuances is what matters.

Use source data as a compass, and keep the plan aligned with safety and risk controls. The machine can crunch signals, while human teams interpret them and decide what to test next.

In practice, the best path blends automation with human judgment. It helps teams keep momentum, stay focused, and answer the questions that arise as goals evolve. When marketing teams take ownership of creative direction and schedule experiments thoughtfully, machines shrink repetitive work and amplify impact. Begin with a 90-day pilot to evaluate time-to-publish, engagement lift, and cost per lead.

The future of sales isn’t human or AI; it’s both, says Bryant AI marketing expert Stefanie Boyer

Prioritize a hybrid sales engine: blend a human strategist’s instincts with AI analytics to drive reliable results. This approach brings the best of both worlds: the authenticity of messaging written by people and the analytical speed needed to analyze signals, run tests, and optimize campaigns. Prioritize the right signals, keep a clear focus on what matters, and use reporting that shows what each layer contributes.

What’s next for sales? Tie every decision to the customer experience. Visuals and real experiences ground messaging in reality. A balanced workflow reduces burnout by distributing creative tasks and data work, which helps everyone stay inspired while remaining rigorous. Track issues and iterate quickly with reporting that answers the key pipeline questions: which channels deliver the best response, and how well does the attribution model reflect their contributions?

Practical steps: run short cycles of tests every 1-2 weeks, using live data to validate hypotheses. Build dashboards for analytics and publish a weekly report with 3-5 actionable insights. Analyze the gap between forecast and reality, then adjust budgets, creative briefs, and channel bets. Keep the optimization steady by documenting what worked and what didn’t.
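The forecast-versus-reality step is simple arithmetic, so it can be automated for the weekly report. The sketch below is a minimal illustration, not a prescribed method: the `ChannelResult` record, the 10% tolerance band, and the action labels are all hypothetical choices. It computes each channel’s relative gap, (actual − forecast) / forecast, and lists the biggest shortfalls first.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class ChannelResult:
    """Hypothetical weekly-report row: forecast vs. actual for one channel."""
    channel: str
    forecast: float  # e.g. forecast leads for the week
    actual: float    # leads actually observed


def forecast_gap(rows: list[ChannelResult], tolerance: float = 0.10) -> list[tuple[str, float, str]]:
    """Return (channel, relative gap, suggested action), biggest shortfall first.

    The tolerance band and action labels are illustrative assumptions.
    """
    report = []
    for row in rows:
        if row.forecast == 0:
            raise ValueError(f"forecast for {row.channel} must be non-zero")
        gap = (row.actual - row.forecast) / row.forecast
        if gap > tolerance:
            action = "scale up"      # beating forecast: candidate for more budget
        elif gap < -tolerance:
            action = "investigate"   # missing forecast: revisit brief or channel bet
        else:
            action = "hold"          # within tolerance: keep current plan
        report.append((row.channel, round(gap, 3), action))
    # Sort ascending by gap so the largest misses lead the report.
    report.sort(key=lambda item: item[1])
    return report


if __name__ == "__main__":
    weekly = [
        ChannelResult("email", 200, 230),
        ChannelResult("paid_social", 400, 300),
        ChannelResult("search", 500, 510),
    ]
    for line in forecast_gap(weekly):
        print(line)
```

Keeping the tolerance as a parameter lets each team decide how large a miss must be before it changes budgets or briefs.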

Bottom line: the future of sales blends human insight and machine precision. Assign a dedicated owner to maintain that balance, invest in training to preserve authenticity, and ensure visuals align with brand voice. Pose questions, collect feedback, and iterate. What’s next is a repeatable loop: learn, apply, measure, and evolve, so everyone benefits from better experiences.

Identify tasks best suited for AI-driven ideation in campaigns

To streamline creative ideation without sacrificing relevance, deploy AI to generate baseline concepts, then have people polish and own the final messaging. If you’re strapped for time, AI can draft dozens of variants for each asset, enabling rapid tests and learning. As campaigns evolve, this loop can become a core part of your workflows, helping teams discover patterns without exhausting people. It doesn’t replace human judgment; AI outputs are a tool that makes the team more productive and supports strategic decisions.

- Headline and copy concept generation: AI drafts 50-200 headline variants per brief across tones and value propositions. Editors shortlist 5-10 to test next, and tests identify the top performers. This cuts manual drafting time and reduces burnout.

- Blog content angles and outlines: AI proposes angles, hooks, meta topics, and outlines for blog posts, ensuring coverage of diverse perspectives while preserving brand voice.

- Subject lines and email copy: AI generates 20-40 subject lines and multiple body variants per segment; tests reveal which combinations drive open rates and engagement.

- Audience problem-solving framing: AI surfaces angles framed around solving concrete user problems, helping messaging stay relevant across channels and contexts.

- Personalized concept sets for segments: generate tailored variants for different personas or industries; templates get reused and adapted quickly without starting from scratch.

- Downstream asset ideation: propose visual directions, layouts, and micro-copy for landing pages, banners, and video scripts to maintain consistency across downstream assets.

- Testing plans and hypotheses: AI drafts test hypotheses, KPI targets, and measurement plans; run tests to validate and refine, without analyzing data manually in the first pass.

- Workflow integration and governance: embed AI outputs into existing workflows with prompts and guardrails; advanced configurations keep humans in control while still allowing heavy iteration.

- Oversight and evaluation loops: define criteria for evaluating ideas, monitor observed signals, and iterate rapidly, with human oversight guiding brand alignment.

- Burnout reduction and capacity planning: automate repetitive ideation tasks to reduce burnout, freeing people for strategic, high-value storytelling and making room for creative experimentation.

Benchmark metrics for evaluating AI-generated vs human-created content

Recommendation: implement a hybrid evaluation protocol that combines measurable automated metrics with human judgments, and test AI-powered and human-created content in parallel. Use a two-tier score: a quantitative tier (0–5) for relevance, factuality, and readability, and a qualitative tier (1–5) for emotional resonance and brand-aligned messaging. Target an average automated score of 4.0+ and an average qualitative score of 4.0+ across 200 items per batch. Calibrate against a human-AI baseline so machine output matches real-world expectations. The goal is not replacement but a tool that strengthens decision-making, with humans and AI jointly optimizing for outcomes that matter to the audience.
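The two-tier targets translate directly into a batch check. This is a minimal sketch under stated assumptions: each tier’s item score is taken as the plain mean of its components (the protocol above does not say how components are combined), and `score_item` and `batch_summary` are hypothetical helper names. Running the same check separately on the AI-generated and human-created batches gives the side-by-side comparison.

```python
from statistics import mean

AUTOMATED_TARGET = 4.0    # target batch average on the 0-5 automated tier
QUALITATIVE_TARGET = 4.0  # target batch average on the 1-5 human-rated tier


def score_item(relevance, factuality, readability, resonance, brand_fit):
    """Return (automated, qualitative) scores for one item.

    Assumption: each tier is the unweighted mean of its components.
    """
    for value in (relevance, factuality, readability):
        if not 0 <= value <= 5:
            raise ValueError("automated components must be in [0, 5]")
    for value in (resonance, brand_fit):
        if not 1 <= value <= 5:
            raise ValueError("qualitative components must be in [1, 5]")
    return mean([relevance, factuality, readability]), mean([resonance, brand_fit])


def batch_summary(items):
    """Average both tiers over a batch and check them against the targets."""
    if not items:
        raise ValueError("batch is empty")
    automated = mean(a for a, _ in items)
    qualitative = mean(q for _, q in items)
    return {
        "automated": automated,
        "qualitative": qualitative,
        "passes": automated >= AUTOMATED_TARGET and qualitative >= QUALITATIVE_TARGET,
    }


if __name__ == "__main__":
    ai_batch = [score_item(5, 4, 4, 4, 5), score_item(4, 4, 4, 4, 4)]
    print(batch_summary(ai_batch))
```

If some components matter more for a given channel, a weighted mean can replace the unweighted one without changing the batch check.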

Measurable metrics cover content quality and impact. Track factual accuracy (error rate under 2%), semantic alignment (BERTScore above 0.75), readability (Flesch-Kincaid grade level 8–12 for broad audiences), brand voice consistency (tone and vocabulary), and message coherence. Measure engagement: time on page, scroll depth, and CTA click-through rate. Include scheduling efficiency: time-to-publish per piece and cadence adherence, and log how AI-powered variants affect overall publishing velocity. AI content often lacks domain nuance, so add guardrails that force checks on specialty topics. Keep the scoring table transparent so everyone understands the expected quality level and how it shapes content strategy across channels.
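The readability band can be checked automatically with the standard Flesch-Kincaid grade formula, 0.39 × (words / sentences) + 11.8 × (syllables / words) − 15.59. The sketch below assumes a crude vowel-group syllable counter and regex-based sentence splitting; both are approximations, so dedicated readability libraries or dictionary-based syllable counts will give somewhat different numbers. `in_target_band` is a hypothetical helper for the 8–12 target.

```python
import re


def count_syllables(word: str) -> int:
    """Heuristic syllable count: vowel groups, minus a silent trailing 'e'."""
    word = word.lower()
    count = len(re.findall(r"[aeiouy]+", word))
    # Drop a silent final 'e' ("make"), but keep "-le" endings ("table").
    if word.endswith("e") and not word.endswith("le") and count > 1:
        count -= 1
    return max(count, 1)


def flesch_kincaid_grade(text: str) -> float:
    """Flesch-Kincaid grade level using the heuristic counts above."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z']+", text)
    if not sentences or not words:
        raise ValueError("text must contain at least one sentence and one word")
    syllables = sum(count_syllables(w) for w in words)
    return 0.39 * len(words) / len(sentences) + 11.8 * syllables / len(words) - 15.59


def in_target_band(text: str, low: float = 8.0, high: float = 12.0) -> bool:
    """True if the estimated grade level falls inside the target band."""
    return low <= flesch_kincaid_grade(text) <= high


if __name__ == "__main__":
    print(round(flesch_kincaid_grade("The cat sat on the mat."), 2))
```

Because the syllable heuristic undercounts some words, it is safer to treat scores near the band edges as prompts for a human read than as hard pass/fail decisions.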

The testing protocol emphasizes realism and diversity. Use 250 items per batch across categories such as beverage campaigns and product tutorials, covering both long-form articles and microcopy. Randomize presentation order, interleave AI-generated and human-created items, and collect two sets of ratings from independent panels to improve reliability. Track inter-rater reliability and aim for a Cronbach’s alpha above 0.7. Keep the process anchored to consistent criteria rather than letting it drift toward subjective judgments, and document how each piece affects scheduling, distribution, and overall decision-making.
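Cronbach’s alpha for a rating matrix is α = k / (k − 1) × (1 − Σ σ²ᵢ / σ²ₜₒₜₐₗ), where k is the number of raters, σ²ᵢ is the variance of rater i’s scores across items, and σ²ₜₒₜₐₗ is the variance of each item’s summed score. The sketch below treats raters as the columns of a hypothetical `ratings[item][rater]` matrix; it uses population variance throughout, which leaves the ratio unchanged compared with sample variance.

```python
from statistics import pvariance


def cronbach_alpha(ratings: list[list[float]]) -> float:
    """Cronbach's alpha where ratings[i][j] is rater j's score for item i."""
    n_items = len(ratings)
    if n_items < 2:
        raise ValueError("need at least two rated items")
    k = len(ratings[0])
    if k < 2 or any(len(row) != k for row in ratings):
        raise ValueError("need at least two raters and a complete matrix")
    # Variance of each rater's scores across all items.
    rater_variances = [pvariance(column) for column in zip(*ratings)]
    # Variance of the per-item total score.
    total_variance = pvariance([sum(row) for row in ratings])
    if total_variance == 0:
        raise ValueError("total scores have zero variance; alpha is undefined")
    return k / (k - 1) * (1 - sum(rater_variances) / total_variance)


if __name__ == "__main__":
    panel = [[4, 5], [3, 3], [5, 5], [2, 3], [4, 4]]
    alpha = cronbach_alpha(panel)
    print(f"alpha = {alpha:.2f}", "OK" if alpha > 0.7 else "recalibrate raters")
```

When alpha comes in below 0.7, the usual next step is to tighten the rubric or recalibrate raters before trusting the batch scores.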

Decision-making blends AI and human input. The dashboard presents scores for AI-generated and human-created content side by side and lets either track trigger escalation to a human reviewer when risk thresholds are crossed. Working together, teams set guardrails so that content choices optimize for impact without discounting user value or human insight. Be clear that AI is not a replacement but a partner in brainstorming, planning, and final polish. Use a human-AI benchmark to check that the system adapts to nuanced contexts and emotional signals that machines still struggle with.

Practical steps to implement: 1) define measurable metrics and thresholds; 2) run a six-week pilot; 3) build a live dashboard; 4) run regular cross-channel testing; 5) iterate on feedback; 6) track impact on revenue, engagement, and brand perception. Schedule weekly reviews where leadership and content creators compare the top AI and human items, and adjust the workflow to keep content aligned. This approach helps everyone understand what level of quality to expect and how AI-powered tools affect decision-making in real campaigns, from beverage brands and beyond. Finally, design governance that preserves the value of human input.

Blending storytelling with data: building hybrid creatives that convert

Start with a concrete rule: pair a tight narrative hook with a rapid data test in a two-week sprint. Draft a 120-second story arc that aligns with a single offer, validate it with two landing-page variants, and measure the results, including seconds to first interaction and conversions. Run three micro-tests and iterate on the outcomes within 14 days. Structure the workflow so that workshops train teams in both craft and analytics, and document lessons in a shared table.

Behind the scenes, map the narrative beats to behavioral signals: scroll depth, click paths, time on page, churn risk, and micro-conversions. Subtle adjustments to tone, imagery, and pacing can drive big results without a heavy overhaul of assets. When issues arise, address them quickly through testing rather than ignoring them; a clear, transparent testing plan reduces frustration and keeps students and colleagues engaged. If responses stall, tests reveal why; if a line underperforms, a quick test surfaces a better alternative. Balance a love of creativity with data discipline so the work never turns into a dull routine.

According to Boyer, creativity flourishes where structure supports exploration. Align the table of experiments with the creative brief so that every idea has a test and a hypothesis. In practice, use a simple table to capture assumptions (audience signals, narrative hook, asset format, and success metric) and review it weekly with students and colleagues. As data comes in, current insights should guide decisions without muting imagination. If you see high churn in a segment, pivot the story angle quickly rather than ignoring the signal. This approach requires a disciplined, repeatable rhythm that teams can own.

| Element | Action | Metric | Time frame |
|---|---|---|---|
| Headline narrative | Test hooks and opening lines | CTR, time on page, seconds to first interaction | 14 days |
| Visual asset | Evaluate imagery and color palette | CTR, engagement rate | 14 days |
| CTA copy | Experiment with phrasing | Conversions, signups | 14 days |
| Story arc pacing | A/B test story beats | Scroll depth, completion rate | 14 days |
| Retention loop | Send a follow-up narrative email | Return rate, churn rate | 28 days |
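A table like this can double as a structured experiment log. The sketch below is one possible shape for it, assuming a hypothetical `Experiment` record that combines the table’s columns with the assumptions listed earlier (audience signal, narrative hook, asset format, success metric). It also uses the simple period definitions churn rate = customers lost ÷ customers at start and return rate = returning recipients ÷ recipients. Teams that define these metrics differently should swap in their own formulas.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class Experiment:
    """Hypothetical experiment-log row mirroring the table above."""
    element: str
    action: str
    hypothesis: str
    audience_signal: str
    narrative_hook: str
    asset_format: str
    success_metric: str
    start: date
    days: int = 14  # most rows in the table run for 14 days

    @property
    def review_date(self) -> date:
        """Date on which the experiment's results should be reviewed."""
        return self.start + timedelta(days=self.days)


def churn_rate(customers_at_start: int, customers_lost: int) -> float:
    """Share of the starting cohort lost during the period."""
    if customers_at_start <= 0:
        raise ValueError("customers_at_start must be positive")
    return customers_lost / customers_at_start


def return_rate(recipients: int, returned: int) -> float:
    """Share of email recipients who came back during the period."""
    if recipients <= 0:
        raise ValueError("recipients must be positive")
    return returned / recipients


if __name__ == "__main__":
    retention = Experiment(
        element="Retention loop",
        action="Send a follow-up narrative email",
        hypothesis="A story-driven follow-up lowers churn",
        audience_signal="Inactive for 14+ days",
        narrative_hook="What happened next",
        asset_format="Email",
        success_metric="Churn rate",
        start=date(2024, 1, 1),
        days=28,
    )
    print(retention.review_date, churn_rate(1000, 50), return_rate(800, 120))
```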

The hybrid approach can deliver real efficiency gains: unified storytelling and data-driven refinement reduce waste and accelerate wins. It creates a collaborative space where students and professionals share feedback, shortening the time from concept to result in fast-paced projects. By balancing a love of craft with analytical rigor, teams reduce friction and churn and build a repeatable path to conversion.

Step-by-step setup for an AI-assisted creative workflow

Step 1: Start with a standardized brief and a reusable template to guide every asset. Place the initial draft on the left side of your workspace so the real voice stays intact as you feed it to Jasper for rapid ideation. Use this one-page brief to define the audience, the offer, and a measurable outcome, and tie that outcome to a primary KPI to keep campaigns focused and avoid drift.

Step 2: Build a modular creative template for high-volume creation, with headline, subhead, body, CTA, and visual-prompt blocks. Predefine tone, length, and brand guidelines, and encode them in prompts so AI can deliver consistent drafts, then route those drafts through human review. Structure prompts the same way for Jasper and any other tools so brand voice stays consistent across campaigns.
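One way to encode tone, length, and brand rules once and reuse them for every block is a small prompt builder plus a post-draft check. The sketch below is tool-agnostic: it only produces plain-text prompts you could paste into Jasper or another tool, and it assumes no vendor API. `BrandGuide`, `BLOCK_LIMITS`, and the character limits are hypothetical placeholders for your own guidelines.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class BrandGuide:
    """Hypothetical brand rules shared by every prompt."""
    tone: str
    banned_phrases: tuple[str, ...] = ()


# Hypothetical per-block character limits; replace with your own guidelines.
BLOCK_LIMITS = {"headline": 60, "subhead": 120, "body": 600, "cta": 25, "visual_prompt": 300}


def build_prompt(block: str, brief: dict[str, str], guide: BrandGuide) -> str:
    """Assemble a plain-text prompt for one creative block from the brief."""
    if block not in BLOCK_LIMITS:
        raise ValueError(f"unknown block: {block}")
    lines = [
        f"Write the {block} for a campaign.",
        f"Audience: {brief['audience']}",
        f"Offer: {brief['offer']}",
        f"Primary KPI: {brief['kpi']}",
        f"Tone: {guide.tone}",
        f"Maximum length: {BLOCK_LIMITS[block]} characters.",
    ]
    if guide.banned_phrases:
        lines.append("Avoid these phrases: " + ", ".join(guide.banned_phrases))
    return "\n".join(lines)


def check_draft(block: str, draft: str, guide: BrandGuide) -> list[str]:
    """List rule violations in an AI draft before it goes to human review."""
    problems = []
    limit = BLOCK_LIMITS[block]
    if len(draft) > limit:
        problems.append(f"{block} is {len(draft)} chars (limit {limit})")
    for phrase in guide.banned_phrases:
        if phrase.lower() in draft.lower():
            problems.append(f"contains banned phrase: {phrase}")
    return problems


if __name__ == "__main__":
    guide = BrandGuide(tone="warm, plain-spoken", banned_phrases=("game-changer",))
    brief = {"audience": "small-business owners", "offer": "free trial", "kpi": "trial signups"}
    print(build_prompt("headline", brief, guide))
    print(check_draft("headline", "A real game-changer for your team", guide))
```

Running the same `check_draft` on every draft, whatever tool produced it, is what keeps the human review step focused on voice and judgment rather than mechanical rule checks.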

Step 3: Data and analytics: connect sources (CRM, ad platforms, web analytics). Define where to pull signals from and where to deliver assets; set up dashboards that read left to right, from input signals to outcomes; track downstream effects on conversions; and use analytics to quantify the impact of AI-assisted assets on engagement.

Step 4: Toolchain setup: assign Jasper to ideation and first drafts, and add a vision-check step to ensure alignment with customer problems. Identify where human editors should intervene, set SLAs for revisions, and secure approvals from marketing and product teams to speed up bidding decisions and idea iteration. This step is critical for avoiding drift and keeping messaging aligned with goals.

Step 5: QA and governance: keep a personal, authentic voice by adding human touches; tag assets with metadata; check whether messaging could affect downstream outcomes; and verify the accuracy of claims and data points.

Step 6: Launch and measure: run tight, controlled tests across large, high-volume campaigns, using A/B tests to compare AI-assisted variants against a baseline. Track wins in analytics, adjust bidding strategies based on early results, and align with salespeople so feedback loops cover downstream outcomes. Use the A/B results to identify which variants actually outperform manual drafts.
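To judge whether an AI-assisted variant really beats the manual baseline, a standard choice for conversion rates is the two-proportion z-test. The sketch below uses only the standard library. It computes the pooled standard error and derives the two-sided p-value as erfc(|z| / √2). The function name is hypothetical, and the 0.05 significance cut-off in the usage example is a common convention, not a rule from this article.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Compare conversion rates of A (baseline) and B (variant).

    Returns (absolute lift of B over A, z statistic, two-sided p-value).
    """
    if min(n_a, n_b) <= 0:
        raise ValueError("sample sizes must be positive")
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the null hypothesis that both variants convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("no variation in outcomes; test is undefined")
    z = (p_b - p_a) / se
    # Two-sided p-value: 2 * (1 - Phi(|z|)) == erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return p_b - p_a, z, p_value


if __name__ == "__main__":
    lift, z, p = two_proportion_z_test(conv_a=100, n_a=1000, conv_b=150, n_b=1000)
    verdict = "significant" if p < 0.05 else "not significant"
    print(f"lift={lift:.3f} z={z:.2f} p={p:.4f} ({verdict})")
```

Fixing the sample size before launch, instead of stopping as soon as p dips below the threshold, keeps the comparison honest.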

Step 7: Optimization and scaling: codify proven patterns into reusable templates; when metrics improve, scale to new channels; use discovery loops to surface new formats and creative directions; and keep a personal, intriguing touch to sustain audience resonance.

Data quality, governance, and compliance for responsible AI marketing

Audit data sources now and implement automated quality gates that block low-quality or unconsented data from AI-powered models. Create a data catalog with lineage, consent, and freshness tags that drive guardrails across every workflow.
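A quality gate can be a simple function over catalog entries that uses the fields named below (source, last_updated, consent, usage constraints). This is a minimal sketch under assumptions: the `CatalogEntry` shape, the 30-day freshness window, and the `no_<purpose>` naming for usage constraints are all hypothetical conventions to adapt to your own catalog.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class CatalogEntry:
    """Hypothetical data-catalog record."""
    source: str
    last_updated: datetime             # timezone-aware timestamp
    consent: bool
    usage_constraints: frozenset[str]  # e.g. {"no_training"}


def quality_gate(entry: CatalogEntry, purpose: str,
                 max_age: timedelta = timedelta(days=30),
                 now: datetime | None = None) -> tuple[bool, str]:
    """Decide whether a dataset may feed a model for the given purpose."""
    now = now or datetime.now(timezone.utc)
    if not entry.consent:
        return False, "blocked: no consent"
    if now - entry.last_updated > max_age:
        return False, "blocked: stale"
    if f"no_{purpose}" in entry.usage_constraints:
        return False, f"blocked: {purpose} not permitted"
    return True, "ok"


if __name__ == "__main__":
    crm = CatalogEntry("crm", datetime(2024, 5, 20, tzinfo=timezone.utc), True, frozenset({"no_training"}))
    now = datetime(2024, 6, 1, tzinfo=timezone.utc)
    print(quality_gate(crm, "training", now=now))   # blocked by usage constraint
    print(quality_gate(crm, "analytics", now=now))  # allowed
```

Checking consent first means unconsented data is blocked regardless of how fresh or otherwise permitted it is, which matches the priority described above.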

- Data quality and provenance: Build a centralized data catalog with fields for source, last_updated, consent, and usage constraints. Apply validation rules at the point of ingestion and across every connector to reduce off-target outputs and improve authenticity. Use feedback loops to learn and adjust rules as data shifts.

- Governance and workflows: Define roles, approval gates, and change control for model updates. Map decision points into explicit workflows so teams can act fast when retraining models or updating creatives. Spell out whether data may be used for training, and establish retention rules so teams stay aligned.

- Privacy and consent: Maintain opt-in status for email campaigns, respect do-not-contact preferences, and conduct a DPIA for AI marketing use. Use pseudonymization for analytics while keeping data usable for learning. If a user doesn’t consent to certain processing, block that processing path.

- Processing real-time signals: For real-time processing, set up streaming pipelines that monitor churn drivers and off-target signals, and re-segment or pause campaigns before they send. Link outputs back to the catalog to keep data aligned and auditable.

- Authenticity and outputs: Apply attribution and logging to show how an output was generated; require human oversight for creative decisions and mark AI-generated portions to preserve transparency.

- Learning and small tests: Run small pilot cohorts to validate data rules and model prompts; use learnings to tighten quality gates and reduce drift before scaling to larger markets. This helps you build confidence that the system responds thoughtfully to feedback.

- Audits and reporting: Schedule regular compliance checks, maintain immutable logs, and publish concise dashboards for stakeholders. Include data lineage visuals, consent status, and model version history to demonstrate governance.

- Impact and optimization: Track metrics such as churn reduction, engagement lift, and conversions; tie improvements to specific rule changes and model iterations, so you can demonstrate wins on key marketing outcomes.

- Driver-focused governance: Define drivers such as audience attributes and creative variants; limit prompts to policy-compliant content; monitor which drivers deliver the best results and feed insights back to workflows. This keeps campaigns aligned with brand values and privacy rules.

- Anomaly detection and warning signals: Implement anomaly detection to spot irregular spikes; treat a sudden hiccup in metrics as a signal to halt processing and review data provenance, so corrective action happens quickly.
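A simple starting point for spotting irregular spikes is a rolling z-score: compare each new value with the mean and standard deviation of a trailing window and flag it when it falls more than a threshold number of standard deviations away. This is one basic technique among many (seasonal models or robust median-based methods may suit noisy marketing data better), and the 7-day window and threshold of 3 are assumed defaults.

```python
from statistics import mean, stdev


def flag_anomalies(values: list[float], window: int = 7, threshold: float = 3.0) -> list[int]:
    """Return indices whose value deviates sharply from the trailing window.

    A point is flagged when |value - window mean| / window stdev > threshold.
    """
    if window < 2:
        raise ValueError("window must contain at least two points")
    flagged = []
    for i in range(window, len(values)):
        history = values[i - window:i]
        mu = mean(history)
        sigma = stdev(history)
        if sigma == 0:
            # Perfectly flat history: any change at all is unusual.
            if values[i] != mu:
                flagged.append(i)
            continue
        if abs(values[i] - mu) / sigma > threshold:
            flagged.append(i)
    return flagged


if __name__ == "__main__":
    daily_signups = [100, 102, 98, 101, 99, 100, 103, 101, 250, 100]
    spikes = flag_anomalies(daily_signups)
    if spikes:
        print(f"Pause processing and review provenance for days {spikes}")
```

In a pipeline, a non-empty result would be the trigger for pausing sends and checking the catalog entries behind the affected metric.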

AI vs Human Creativity – Can Machines Really Replace Marketers?
