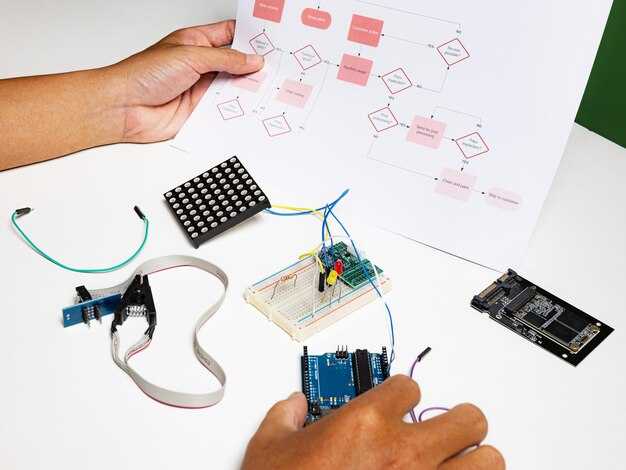

Begin with a clear objective: define the task, the success metrics, and how you will check results. State a specific aim, and work with engineers to draft a signed-off prompt spec. To reduce drift, establish a baseline prompt and compare later results against it. Gather resources in English and other languages to anchor expectations. Use a different input style for each prompt variant and compare outcomes across a wide range of domains.

Adopt a technique-focused workflow: compose prompts with a specific intent, constraints, and signals. Structure prompts in short sentences, then run a check against a validation set to confirm that the outputs are coherent and actionable; this approach tends to carry over well across domains. Build templates that scale: a base prompt, plus a few adapters for domains such as code, writing, or data interpretation. The results will reveal where to tighten constraints and add examples.

Iterate in cycles: test a small, controlled set of prompts, compare results, and adjust. Keep prompts concise, use specific signals, and avoid ambiguity. Use one of these approaches: zero-shot, few-shot, or chain-of-thought sequences; if chain-of-thought is used, provide a short, coherent rationale to guide the model.

Maintain a living prompt library that tracks prompts, contexts, inputs, and outcomes. Tag prompts by domain, difficulty, and resources used; keep a changelog and signed-off versions to ensure alignment across teams. For multilingual tasks, maintain parallel prompts in English and other languages, and verify translation parity to avoid drift. Apply a lightweight QA step or a quick check to catch incoherent outputs early.

Practical Prompt Engineering Guide

Define a concrete objective and run a quick pilot with five examples to verify responses. Use a simple rubric to rate relevance, clarity, and factual accuracy, and document the outcomes for each prompt.

Create a signed-off, brief statement of intent for prompts, then apply a fixed structure: Context, Instruction, and Question. Keep the context to 1–2 sentences and state the action in the instruction.
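
The fixed structure can be enforced with a small helper. This is a minimal sketch, not a prescribed implementation: the function name `build_prompt` and the sample texts are hypothetical, chosen only to illustrate the Context, Instruction, Question layout.

```python
def build_prompt(context: str, instruction: str, question: str) -> str:
    """Assemble a prompt in the fixed Context / Instruction / Question layout."""
    parts = {"Context": context, "Instruction": instruction, "Question": question}
    for name, text in parts.items():
        # Reject empty sections so every prompt follows the same structure.
        if not text.strip():
            raise ValueError(f"{name} must not be empty")
    return "\n".join(f"{name}: {text.strip()}" for name, text in parts.items())


# Hypothetical example content
prompt = build_prompt(
    context="The user is a support agent handling refund requests.",
    instruction="Summarize the customer's message in two sentences.",
    question="What is the customer asking for?",
)
print(prompt)
```

Keeping the three sections as separate arguments makes it easy to vary one part (for example, the instruction) while holding the others constant during a pilot.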

Collect sources and datasets that cover the relevant language contexts, including official docs, customer requests, and chat transcripts. These sources make it possible to produce more accurate outputs in areas that models often misunderstand, and AI engineers value the broader coverage.

Adopt a structured approach: use a fixed prompt template, run 10–20 prompts, compare responses to a vetted baseline, and note gaps for refinement. Translate findings into clear recommendations.

Maintain a signed-off, full version history of prompts, track changes with concise notes, and credit the sources used.

Share templates across teams, collect feedback, and keep enthusiasm for improvement high. If clients request updates, adapt the templates and refine the prompts accordingly.

Define concrete success criteria for each prompt

Define a concrete success criterion for each prompt and attach it to the outputs to guide evaluation. This keeps the task focused and speeds iteration, so you can quickly detect gaps and adjust. Tie criteria to the version of the prompt and to the domain context, especially when patient data is involved. Think in terms of explicit, testable results rather than vague assurances, so you can compare prompts across files and versions consistently.

Use a compact rubric that covers what to produce, how to format it, and how to judge quality. Ensure that every criterion is limited in scope and tied to the user's goal, because generative outputs vary by prompt. This approach helps you avoid ambiguous feedback and supports rapid decisions about next steps.

- Clarify task scope and define a statement of success
  - Task: describe the objective in a single sentence and include a clear statement of what counts as a successful output.
  - Context: specify the domain and whether a patient context applies; note any constraints that affect judgment.
  - Constraints: if data is limited, state what can be used and what must be excluded, such as sensitive details.
- Decide output formats, files, and metadata
  - Outputs: define exact deliverables (for example, a concise summary, a structured JSON object, or a bullet list) and their formats; list the required fields for each output.
  - Files: specify where to store results and how to name the files for easy retrieval; include a sample path or naming convention.
  - Versioning: require a version tag and maintain a brief changelog to track iterations.
- Set measurable quality metrics and acceptance thresholds
  - Metrics: accuracy, completeness, relevance, and timeliness; assign numeric thresholds (e.g., >= 90% relevance, < 5% factual error).
  - Thresholds: provide concrete acceptance criteria and a fallback plan if a threshold is not met.
  - Differences by domain: tailor criteria to each domain and document any domain-specific adjustments.
- Define evaluation method and sources
  - Evaluation: specify whether humans or automated checks will judge each criterion; outline a short checklist for reviewers.
  - Sources: require credible sources and a list of the references used to verify facts; reduce hallucinations by cross-checking against trusted sources.
  - No extraneous data: ensure evaluations rely only on the provided outputs, without depending on external, unknown inputs.
- Document implementation details and review process
  - Documentation: attach a brief rubric describing how to score each criterion; include example prompts and sample outputs to keep scoring consistent across teams.
  - Collaboration: involve reviewers from different areas to capture diverse perspectives and reduce bias.
  - Feedback loop: note actionable differences and propose concrete prompt refinements for the next version.
- Provide templates and practical examples
  - Template: include a ready-to-fill statement, expected outputs, and acceptance thresholds; ensure it references the files, the version, and the list of sources.
  - Examples: show a minimal prompt next to an enhanced prompt and compare results against the criteria; use real-world contexts (for example, a patient case) to illustrate applicability.
  - Automation hint: create a lightweight test harness that runs prompts, captures outputs, and flags criteria failures automatically.
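
The automation hint can be sketched as a threshold check over per-prompt scores. The snippet below is illustrative only: the `Criterion` class, the `failed_criteria` helper, and the score values are hypothetical, and the two thresholds simply mirror the example figures in the metrics item (at least 90% relevance, under 5% factual error).

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Criterion:
    name: str
    passes: Callable[[float], bool]  # True when the measured value is acceptable


# Thresholds taken from the example figures in the list above.
CRITERIA = [
    Criterion("relevance", lambda value: value >= 0.90),
    Criterion("factual_error", lambda value: value < 0.05),
]


def failed_criteria(scores: dict[str, float]) -> list[str]:
    """Return the names of criteria that are missing or outside their threshold."""
    failures = []
    for criterion in CRITERIA:
        value = scores.get(criterion.name)
        if value is None or not criterion.passes(value):
            failures.append(criterion.name)
    return failures


# Hypothetical scores for two prompt versions (not measured data).
results = {
    "v1": {"relevance": 0.93, "factual_error": 0.02},
    "v2": {"relevance": 0.85, "factual_error": 0.07},
}
for version, scores in results.items():
    print(version, failed_criteria(scores) or "accepted")
```

Treating a missing score as a failure is a deliberate choice here: a prompt version that was never measured should not pass by default.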

Choose between direct instructions and example-based prompts

Prefer direct instructions for clearly defined tasks that require crisp, predictable responses; pair them with example-based prompts to illustrate language style, formatting, and decision paths, which makes the constraints easier to communicate and keeps the model focused on them.

Direct instructions shine when the success criteria are explicit: a fixed format, a precise length, or a checklist. For language tasks, add 2–4 exemplars that show tone, structure, and how to handle exceptions; think through edge cases and avoid repetition. When designing the method, keep the directive concise and anchor the examples to the same goal to reinforce consistency across responses.

A hybrid approach strengthens resilience: start with a compact directive and follow it with a handful of targeted examples. This helps with new tasks and yields reliable generation while guiding language, tone, and structure. Recommended practice includes reviewing outcomes, updating prompts, adding new examples, and refreshing resources with the latest updates to cover the full spectrum of scenarios.

| Aspect | Direct Instructions | Example-based Prompts |
|---|---|---|
| Clarity | Explicit criteria and fixed format | Shows how to handle variations with defined exemplars |
| When to use | Well-defined tasks; routine outputs | Open-ended or creative analysis tasks |
| Construction | One directive plus constraints | 2–4 exemplars illustrating edge cases |
| Risks | Overfitting to a single path | Drift if examples diverge; watch for repetition |
| Evaluation | Format adherence; objective success criteria | Quality of style; alignment with exemplars |
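
As a rough illustration of the hybrid approach, the sketch below combines one compact directive with 2–4 exemplars. The function name `build_hybrid_prompt`, the `Input:`/`Output:` labels, and the sample tickets are assumptions made for this example, not a required format.

```python
def build_hybrid_prompt(
    directive: str, examples: list[tuple[str, str]], user_input: str
) -> str:
    """Combine a direct instruction with a few input/output exemplars."""
    if not 2 <= len(examples) <= 4:
        raise ValueError("use 2-4 exemplars")
    lines = [directive.strip(), ""]
    for number, (example_input, example_output) in enumerate(examples, start=1):
        lines += [
            f"Example {number}",
            f"Input: {example_input}",
            f"Output: {example_output}",
            "",
        ]
    # End with the real input and an open "Output:" slot for the model to fill.
    lines += [f"Input: {user_input}", "Output:"]
    return "\n".join(lines)


# Hypothetical classification task
hybrid = build_hybrid_prompt(
    directive="Classify each ticket as 'billing' or 'technical'. Reply with one word.",
    examples=[
        ("I was charged twice this month.", "billing"),
        ("The app crashes when I open settings.", "technical"),
    ],
    user_input="My invoice shows the wrong amount.",
)
print(hybrid)
```

Anchoring every exemplar to the same directive is what keeps the examples from drifting apart, which is the main risk listed for example-based prompts in the table.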

Structure multi-step prompts with clear reasoning steps

Draft a five-step prompt that requests explicit reasoning at each stage to produce answers and verifiable outputs. Include a concise justification after each step and collect examples of successful prompts across languages. This prompt-engineering workflow produces outputs that are suitable for audit and easy to compare against your sources and your account's audit trail.

Step 1 – Define objective and constraints

Specify the goal in a single sentence, then list limits such as token limits, privacy constraints for healthcare data, and the desired language versions of the output. Include the data sources and the required outputs (answers and examples). State who will review results and how biases may affect decisions.

Step 2 – Decompose into distinct sub-tasks

Split the main objective into 3–5 concrete sub-tasks with independent inputs and outputs. For each sub-task, attach the input format, the expected output, and a short rationale. Ensure coverage across domains such as coding and healthcare, and test with different contexts to strengthen robustness.

Step 3 – Require reasoning and output format

Ask for a brief justification after each sub-task and a final recommendation. Include a zero-shot variant if needed. Instruct the model to provide answers and a compact justification for each step, then present a concise final result. Do not ask it to reveal an internal monologue; request a short rationale that supports decisions and cites sources when possible.

Step 4 – Validation and bias checks

Incorporate checks against biases by cross-verifying with multiple sources and by presenting different perspectives. Require a short list of counterpoints or alternative options, highlighting potential limitations due to limited data or context. Add a sanity check to confirm that results align with healthcare standards and coding best practices.

Step 5 – Deliverables and evaluation

Define the format for answers, examples, and references, plus audit notes for account tracking. Use a simple rubric: clarity of goals, correctness of sub-task outputs, justification quality, and source alignment. Keep outputs compact for limited contexts, and provide optional expansions for additional language versions and technologies.

Example prompt skeleton (non-executable):

- Goal: design a care plan for a patient profile in healthcare
- Context: limited data
- Constraints: limited tokens, privacy
- Language versions: the required languages
- Data sources: the listed sources
- Zero-shot: yes
- Outputs: answers, examples
- Steps: 1) define sub-task inputs; 2) give a brief justification for each sub-task; 3) compile the final recommendation; 4) attach references; 5) log audit notes for the account trail.

Example variant for zero-shot use and different language contexts: use the same skeleton to generate outputs that can be compared across technologies and systems, keeping formats identical and compatible with different databases and coding workflows. Such prompts help produce consistent answers across platforms and are especially useful for optimizing workflows in healthcare and coding projects alike.
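
One way to keep the skeleton identical across variants is to render it from a small data structure. The following is a sketch under stated assumptions: the `MultiStepPrompt` class, its field names, and the sample care-plan content (including the placeholder source) are hypothetical and exist only to show the multi-step layout with a justification per step.

```python
from dataclasses import dataclass, field


@dataclass
class MultiStepPrompt:
    goal: str
    constraints: list[str]
    sub_tasks: list[str]
    sources: list[str] = field(default_factory=list)

    def __post_init__(self) -> None:
        # Step 2 in the text recommends 3-5 concrete sub-tasks.
        if not 3 <= len(self.sub_tasks) <= 5:
            raise ValueError("expected 3-5 sub-tasks")

    def render(self) -> str:
        lines = [
            f"Goal: {self.goal}",
            "Constraints: " + "; ".join(self.constraints),
            "Steps:",
        ]
        for number, task in enumerate(self.sub_tasks, start=1):
            lines.append(f"{number}) {task} Then give a one-sentence justification.")
        final_step = len(self.sub_tasks) + 1
        lines.append(f"{final_step}) Compile a final recommendation and list counterpoints.")
        if self.sources:
            lines.append("Cite only these sources: " + "; ".join(self.sources))
        lines.append("Do not reveal an internal monologue; keep each rationale short.")
        return "\n".join(lines)


# Hypothetical content for illustration
care_plan = MultiStepPrompt(
    goal="Design a care plan for a patient profile.",
    constraints=["limited tokens", "no personal identifiers"],
    sub_tasks=[
        "Summarize the available patient data.",
        "List candidate interventions.",
        "Flag missing information.",
    ],
    sources=["clinic guideline (placeholder)"],
)
print(care_plan.render())
```

Because the rendering logic is fixed, two variants that differ only in language or constraints produce outputs with the same step numbering, which makes side-by-side comparison simpler.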

Optimize context: token budget and relevance filtering

Recommendation: allocate a fixed token budget for context and prune history to the essentials. For typical tasks, target 2048 tokens of total context and reserve 20–30% for postgeneration output and checks; scale to 4096 tokens for longer, multi-turn interactions. Stay disciplined to prevent bloat and keep the context focused on the core of the task; this reduces noise and keeps the model from generating irrelevant details.
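
The budget arithmetic can be made explicit. This is a minimal sketch: the helper names are hypothetical, and `count_tokens` is a crude whitespace-based stand-in that should be swapped for the tokenizer of whichever model is actually used.

```python
def context_budget(total_tokens: int, reserve_fraction: float) -> int:
    """Tokens left for context after reserving a share for output and checks."""
    if not 0.0 <= reserve_fraction < 1.0:
        raise ValueError("reserve_fraction must be in [0, 1)")
    return total_tokens - int(total_tokens * reserve_fraction)


def count_tokens(text: str) -> int:
    # Assumption: whitespace word count as a rough proxy for real tokenization.
    return len(text.split())


def prune_history(turns: list[str], budget: int) -> list[str]:
    """Keep the most recent turns that fit in the budget, in original order."""
    kept: list[str] = []
    used = 0
    for turn in reversed(turns):
        cost = count_tokens(turn)
        if used + cost > budget:
            break
        kept.append(turn)
        used += cost
    return list(reversed(kept))


print(context_budget(2048, 0.25))  # 1536
print(context_budget(4096, 0.25))  # 3072
print(prune_history(["a b c", "d e", "f g h i"], budget=6))  # ['d e', 'f g h i']
```

With a 2048-token window, reserving 20–30% leaves roughly 1430 to 1640 tokens for context. Pruning from the oldest turn first is only one policy; a summary of dropped turns could be kept instead when early context matters.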

Define a relevance filter that fits the task scope and languages. Starting from the task intent, assemble candidate sources, then compute embeddings to measure each source's similarity to the user prompt. For language models, keep the top 3 to 5 sources and drop the rest. Record the decisions in tables for traceability and debugging, so you can audit why particular retrieved sources were chosen.
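
A relevance filter of this kind can be sketched with cosine similarity and a top-k cut. Note the assumption: `embed` below is a toy bag-of-words stand-in for a real embedding model, and the source names and texts are invented for illustration. Only the ranking and selection logic is the point.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: lowercase word counts."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[word] * b[word] for word in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def top_k_sources(prompt: str, sources: dict[str, str], k: int = 3) -> list[tuple[float, str]]:
    """Return the k most similar sources as (score, name) rows for a decision log."""
    query = embed(prompt)
    scored = [(cosine(query, embed(text)), name) for name, text in sources.items()]
    scored.sort(key=lambda row: row[0], reverse=True)
    return scored[:k]


# Hypothetical candidate sources
sources = {
    "faq_password": "reset your password from the account settings page",
    "billing": "invoices are sent monthly by email",
    "login_help": "if login fails check your password and try again",
    "shipping": "orders ship within two days",
}
for score, name in top_k_sources("how do I reset my password", sources, k=2):
    print(f"{name}\t{score:.2f}")
```

Returning the scores alongside the names gives the rows needed for the traceability table: each retained source can be logged with the similarity that justified keeping it.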

Balance sources against the prompt length. Build a retrieval step that appends only highly relevant excerpts and short summaries rather than full documents. If sources are long, use a translation step to render concise extracts in the target language, then attach those excerpts to the prompt. This helps the model concentrate its attention on the most informative content and avoids pulling in unrelated parts of the text. The result is less noise and a higher probability that the model produces accurate answers for the task.

Postgeneration checks reduce risk of drift. After generation, prune chain-of-thought content in the visible response and provide a succinct answer or a structured result instead. If needed, store the reasoning path in a separate log to support debugging without exposing internal deliberations to the end user.
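
A postgeneration step along these lines might look like the sketch below. It assumes the prompt asked the model to finish with an `Answer:` marker; that marker, the function names, and the logger name are hypothetical conventions for this example rather than anything a model produces by default.

```python
import logging

logger = logging.getLogger("reasoning_log")


def split_response(raw: str, marker: str = "Answer:") -> tuple[str, str]:
    """Return (reasoning, answer); text before the last marker counts as reasoning."""
    reasoning, found, answer = raw.rpartition(marker)
    if not found:
        # No marker present: treat the whole response as the answer.
        return "", raw.strip()
    return reasoning.strip(), answer.strip()


def visible_answer(raw: str) -> str:
    """Log the reasoning path separately and return only the final answer."""
    reasoning, answer = split_response(raw)
    if reasoning:
        logger.debug("reasoning path: %s", reasoning)
    return answer


raw_output = "Reasoning: the order is three days late.\nAnswer: Offer a refund."
print(visible_answer(raw_output))  # Offer a refund.
```

Routing the reasoning to a debug-level logger keeps it available for troubleshooting while the end user sees only the succinct result.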

Track progress with concrete metrics. Compare against papers on retrieval-augmented generation and update routines accordingly. Use improvements in understanding as a primary signal, and log trial prompts and outcomes in tables to observe trends over time. When you update training courses, share summarized guidelines and detailed, illustrated examples to keep teams aligned; include translation steps to support multilingual workflows, and revisit the token budget frequently to ensure relevance and efficiency.

In practice, this approach keeps the scope tight and focused. Avoid drifting into an overextended context; keep your thinking clear by filtering out noise and aligning generated outputs with the core task. By applying discipline from task framing through postgeneration, you achieve more consistent responses and sharper understanding across different language scenarios, while keeping the focus first and foremost on the user's needs and the necessary level of detail. Each refinement nudges your system toward higher-quality outputs, with thoughtful trials and measured improvements informed by reference papers and courses for ongoing learning.

Design evaluation prompts and test cases that reflect real tasks

Design evaluation prompts that reflect real tasks by grounding them in actual user workflows and measurable outcomes. First, identify the latest user problems from the backlog, capture ideas and suggestions, and compile a prompt set that helps the model respond with concrete steps, justifications, and results. Include domains such as Amazon product searches and checkout flows to reflect typical work, and validate prompts against real user intents.

Structure each test case as a mini-task: input, process steps, and final answer. Use reload-ready data fixtures so tests stay current when catalogs update. For each case, specify two or three concrete queries and define evaluation criteria: relevance, coherence, and justification quality. Create a rubric that reviewers can apply quickly, and link each test to a real support or shopping scenario to ensure alignment with actual user outcomes. This approach helps engineering teams compare outputs across the latest iterations of the prompt-crafting pipeline and shows which prompting steps help keep the process transparent.

When designing prompts, craft a set of evaluation signals that go beyond surface accuracy. Focus on consistency, traceability of reasoning, and alignment with intent. Build anchor answers and scoring rubrics, and log prompts, responses, and verdicts. Use resources and tools to assemble realistic datasets from logs and public benchmarks; give cross-functional teams (engineering, product, QA) access to review and iterate. This approach supports robust prompt strategies that stay reliable as inputs evolve, especially in engineering and prompting work.

Operationalize evaluation with a lightweight harness that runs each test case, records prompts, model outputs, and scores, and triggers data reloads when inputs shift. Use the latest results to drive improvements in prompt crafting and to inform the next cycle of iterations. Maintain a living repo of suggestions, ideas, and updated queries to accelerate refinement. Ensure that documentation and training materials help teams understand how to interpret results and how to reuse the tests for Amazon-style product queries and recommendations.

Prompt Engineering Guide – Techniques, Tips, and Best Practices