Begin by mapping your customer journeys, then deploy a drag-and-drop personalization engine to tailor messages at critical points. Adopt a data-driven mindset from day one: these moments reveal opportunities to convert intent into action. Run a 2-week pilot across web, email, and push notifications, testing 3 variants per touchpoint and targeting a 10–20% uplift in engagement.
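In a pilot that tests 3 variants per touchpoint, each user should see the same variant on every visit. The minimal sketch below assumes hash-based bucketing, a common technique rather than any specific engine's API. The function name `assign_variant` and the touchpoint and variant labels are hypothetical.

```python
import hashlib

def assign_variant(user_id: str, touchpoint: str, variants: list) -> str:
    """Deterministically map a user to one variant for a given touchpoint.

    Hashing user_id together with the touchpoint keeps the assignment stable
    across visits, while different touchpoints bucket users independently.
    """
    key = f"{touchpoint}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    return variants[int(digest, 16) % len(variants)]

variants = ["control", "variant_a", "variant_b"]  # hypothetical labels
print(assign_variant("user-123", "cart_page", variants))
```

Because the assignment is a pure function of the IDs, the pilot needs no lookup table to keep exposure consistent.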

Collect first-party signals across web, mobile, and offline touchpoints, then assemble a 360-degree profile that serves as the backbone of your personalization solution. Simply unify the data in one central store so you know what each customer is likely to want next. Set a data transfer target of under 5 minutes for critical segments to keep experiences timely and relevant.
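The sketch below shows one way to fold first-party events from different sources into a single profile and to flag profiles that miss the 5-minute freshness target. The event shape (`customer_id`, `source`, `attributes`, `timestamp`) and the helper names are assumptions for illustration, not a specific product's schema.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta, timezone
from typing import Optional

FRESHNESS_TARGET = timedelta(minutes=5)  # "under 5 minutes" target for critical segments

@dataclass
class Profile:
    customer_id: str
    attributes: dict = field(default_factory=dict)
    sources: set = field(default_factory=set)
    last_updated: Optional[datetime] = None

def merge_event(profiles: dict, event: dict) -> Profile:
    """Fold one first-party event (web, mobile, or offline) into the profile store.

    Attributes are last-arrival-wins; handling out-of-order events properly
    would need per-attribute timestamps.
    """
    profile = profiles.setdefault(event["customer_id"], Profile(event["customer_id"]))
    profile.attributes.update(event.get("attributes", {}))
    profile.sources.add(event["source"])
    ts = event["timestamp"]
    if profile.last_updated is None or ts > profile.last_updated:
        profile.last_updated = ts
    return profile

def is_stale(profile: Profile, now: datetime) -> bool:
    """True when the profile has not been refreshed within the freshness target."""
    return profile.last_updated is None or now - profile.last_updated > FRESHNESS_TARGET

now = datetime.now(timezone.utc)
profiles = {}
merge_event(profiles, {"customer_id": "c1", "source": "web",
                       "attributes": {"last_viewed": "shoes"},
                       "timestamp": now - timedelta(minutes=2)})
merge_event(profiles, {"customer_id": "c1", "source": "store",
                       "attributes": {"loyalty_tier": "gold"},
                       "timestamp": now - timedelta(minutes=1)})
print(profiles["c1"].attributes)  # {'last_viewed': 'shoes', 'loyalty_tier': 'gold'}
print(is_stale(profiles["c1"], now))  # False
```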

Design modular playbooks that adapt in real time, and run short optimization cycles. An agile team lets changes propagate within hours, not days. Define rules that trigger at key points in each journey, and make sure every decision highlights a relevant value proposition. Ensure context transfers cleanly across systems so a shopper sees a coherent message whether they browse, read an email, or chat.

Orchestrate experiences across email, web, push, and in-store from a single control center. Use a drag-and-drop editor to assemble journey-based sequences that surface preferred content at the right point. This approach works across channels; find opportunities for improvement by measuring open rates, click-through rates, and conversions, and compare them against a simple baseline.
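Comparing against a simple baseline comes down to computing the same rates for both groups and reporting the relative change. This is a minimal sketch with illustrative counts (not real results); the helper names are hypothetical, and CTR is computed per message sent, which is one of several common conventions.

```python
def funnel_rates(sent: int, opens: int, clicks: int, conversions: int) -> dict:
    """Per-send rates. CTR here is clicks per message sent; some teams use clicks per open."""
    return {
        "open_rate": opens / sent,
        "click_through_rate": clicks / sent,
        "conversion_rate": conversions / sent,
    }

def relative_lift(variant: dict, baseline: dict) -> dict:
    """Relative change of each rate versus the baseline (0.15 means +15%)."""
    return {name: (variant[name] - baseline[name]) / baseline[name] for name in baseline}

# Illustrative counts only.
baseline = funnel_rates(sent=10_000, opens=2_000, clicks=300, conversions=50)
personalized = funnel_rates(sent=10_000, opens=2_300, clicks=360, conversions=60)
for metric, lift in relative_lift(personalized, baseline).items():
    print(f"{metric}: {lift:+.1%}")
```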

Measure outcomes rigorously and iterate in a dedicated improvement cycle. Define 3 core metrics (engagement rate, time-to-action, and repeat purchase rate) and apply them across all journeys. Carry these insights into the next wave of personalization, and commit to continual improvement so the experience becomes more precise over time.
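The text names the three core metrics but not their formulas. The sketch below assumes simple, common definitions (stated in the docstring) so the metrics are computed the same way across all journeys; adjust the definitions to your own event model.

```python
from statistics import median

def core_metrics(exposed: set, engaged: set, action_delays_s: list, purchases: dict) -> dict:
    """Compute the three core metrics under simple, assumed definitions.

    - engagement rate: exposed customers who engaged / exposed customers
    - time-to-action: median seconds from exposure to first action
    - repeat purchase rate: customers with 2+ purchases / customers with 1+ purchase
    """
    buyers = [c for c, n in purchases.items() if n >= 1]
    repeaters = [c for c, n in purchases.items() if n >= 2]
    return {
        "engagement_rate": len(engaged & exposed) / len(exposed) if exposed else 0.0,
        "median_time_to_action_s": median(action_delays_s) if action_delays_s else None,
        "repeat_purchase_rate": len(repeaters) / len(buyers) if buyers else 0.0,
    }

metrics = core_metrics(
    exposed={"a", "b", "c", "d"},
    engaged={"a", "c"},
    action_delays_s=[30, 120, 45],
    purchases={"a": 1, "b": 3, "c": 2, "d": 0},
)
print(metrics)
```

The median is used for time-to-action because a few very slow responders would otherwise skew a mean.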

Step 1: Define target customer segments and personalization goals

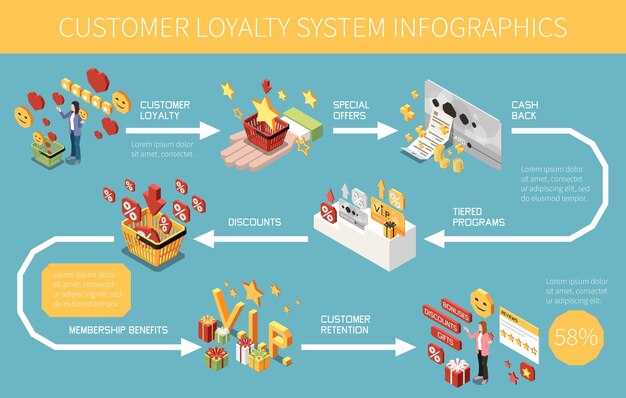

Choose 4–6 target segments based on behavior, value, and context, and set 3 concrete personalization goals you will track. Test results commonly show that segmentation lifts conversions. Define segments such as new users, returning customers, high-value shoppers, seasonal buyers, and at-risk (churn) cohorts. Each segment receives a tailored offer and a specific touchpoint path to improve relevance.
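Segment assignment is often a short, ordered list of rules where the first match wins. The sketch below covers the five example segments; every field name and threshold is a hypothetical placeholder to be tuned against your own data.

```python
from dataclasses import dataclass

@dataclass
class Customer:
    orders: int
    lifetime_value: float
    days_since_last_order: int
    holiday_order_share: float  # share of orders placed during seasonal peaks

def assign_segment(c: Customer) -> str:
    """Return exactly one segment per customer; the first matching rule wins.

    Thresholds are hypothetical. Churn risk is checked before value so that a
    lapsed high-value customer gets a win-back path rather than a VIP offer.
    """
    if c.orders == 0:
        return "new_user"
    if c.days_since_last_order > 90:
        return "at_risk_churn"
    if c.lifetime_value >= 1000:
        return "high_value"
    if c.holiday_order_share >= 0.6:
        return "seasonal_buyer"
    return "returning_customer"

print(assign_segment(Customer(orders=5, lifetime_value=2000, days_since_last_order=10, holiday_order_share=0.1)))
```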

Link each segment to 3–5 measurable goals: lift conversions by a double-digit percentage, increase engagement at key touchpoints, and reduce churn by a meaningful margin. Make goals tangible: for example, target a 12–20% rise in click-through on personalized offers, a 15% lift in add-to-cart rate, and a 5–10% improvement in 90-day retention. The outcomes that matter most are sustained engagement and revenue impact.

Choose the primary channel for each segment and map the triggers that launch personalized experiences at a touchpoint: abandoned carts, post-purchase follow-ups, inactivity re-engagement, and other important behavioral signals. Deliver within minutes of a trigger, and align each message with the user's context. For risk-sensitive products, apply underwriting criteria when tailoring offers.
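One way to enforce "within minutes of a trigger" is to attach a delivery window to each trigger and drop events that have already missed it. This is a sketch under assumptions: the trigger names, channels, and window lengths in `TRIGGERS` are hypothetical, as is `route_trigger`.

```python
from datetime import datetime, timedelta

# Hypothetical trigger map: event type -> (primary channel, delivery window).
TRIGGERS = {
    "cart_abandoned": ("email", timedelta(minutes=15)),
    "order_completed": ("email", timedelta(minutes=10)),
    "inactive_30_days": ("push", timedelta(minutes=30)),
}

def route_trigger(event_type: str, event_time: datetime, now: datetime):
    """Return (channel, send_by) for a known trigger, or None to skip it.

    Events already past their delivery window are dropped rather than sent
    late, since a stale message no longer matches the user's context.
    """
    rule = TRIGGERS.get(event_type)
    if rule is None:
        return None
    channel, window = rule
    send_by = event_time + window
    if now > send_by:
        return None
    return channel, send_by

t0 = datetime(2024, 1, 1, 12, 0)
print(route_trigger("cart_abandoned", t0, t0 + timedelta(minutes=5)))
```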

Create a production-ready framework: reusable templates, modular assets, and coding rules that drive personalized messages at scale. Run live pilots with a small sample, measure the impact on conversions and engagement, then iterate.

Track health metrics with clear dashboards, maintain privacy controls, and push toward tangible outcomes: revenue lift, churn reduction, and stronger channel performance. Prioritize novelty in content and formats to deliver entertainment value while remaining relevant.

Step 2: Collect, unify, and activate real-time customer data

Define data requirements and set up real-time ingestion across all customer touchpoints. Map sources: website events, mobile app interactions, in-store scans, CRM updates, support tickets, and marketing responses. Build a centralized data estate that stores profiles and signals in a unified layer for easy access.

Unify identities across devices with deterministic matching to create a single customer profile. This reduces duplicates and improves results, enabling more precise activation.
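Deterministic matching links two identifiers only when a single trusted record contains both, for example a login that carries an email and a device ID. A union-find (disjoint-set) structure is a standard way to merge those links into one profile per customer. The minimal sketch below uses hypothetical identifier prefixes such as `email:` and `device:`.

```python
class IdentityGraph:
    """Union-find over identifiers (email, device ID, loyalty card, ...)."""

    def __init__(self):
        self.parent = {}

    def find(self, x):
        """Return the representative identifier of x's group."""
        self.parent.setdefault(x, x)
        while self.parent[x] != x:
            self.parent[x] = self.parent[self.parent[x]]  # path halving keeps trees shallow
            x = self.parent[x]
        return x

    def link(self, a, b):
        """Record a deterministic match: a and b belong to the same customer."""
        ra, rb = self.find(a), self.find(b)
        if ra != rb:
            self.parent[rb] = ra

    def profiles(self):
        """Group all known identifiers into one set per resolved customer."""
        groups = {}
        for x in list(self.parent):
            groups.setdefault(self.find(x), set()).add(x)
        return list(groups.values())

g = IdentityGraph()
g.link("email:ana@example.com", "device:ios-42")   # login on a phone
g.link("device:ios-42", "loyalty:7781")            # loyalty scan in the app
g.link("email:bo@example.com", "device:web-9")     # login on the web
print(len(g.profiles()))  # 2
```

Because links come only from records that contain both identifiers, this approach avoids the false merges that probabilistic matching can introduce, at the cost of a somewhat lower match rate.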

Maintain tight coding standards and document knowledge in a living data dictionary. Focus on modular schemas, clear lineage, and governance. Plan to recruit data engineers to operate the pipelines so the team can ensure a smooth handoff between analytics, product, and marketing. Keep the onboarding experience reassuring for users: pace data collection and explain consent clearly.

Latency targets: deliver core signals within 200-500 milliseconds to support true real-time customization. Use streaming technologies (Kafka, Kinesis, or equivalents) to push updates to the profile store and decision layers. Monitor data quality and flag stale signals to prevent drift.
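To check the 200–500 ms target, teams typically track a tail percentile rather than the average, because the average can hide slow outliers. This sketch assumes the nearest-rank percentile method and treats 500 ms as the p95 budget. Both choices, and the helper names, are assumptions for illustration.

```python
import math

LATENCY_BUDGET_MS = 500  # upper end of the 200-500 ms target

def percentile(values, pct):
    """Nearest-rank percentile (pct between 0 and 100)."""
    ordered = sorted(values)
    rank = math.ceil(pct / 100 * len(ordered))
    return ordered[max(rank, 1) - 1]

def latency_report(latencies_ms):
    """Summarize signal delivery latency against the budget."""
    p95 = percentile(latencies_ms, 95)
    return {
        "p50_ms": percentile(latencies_ms, 50),
        "p95_ms": p95,
        "within_budget": p95 <= LATENCY_BUDGET_MS,
    }

samples = [120, 180, 210, 250, 300, 320, 350, 400, 450, 900]  # illustrative
print(latency_report(samples))
```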

Streaming surfaces the cues that fire triggers for dynamic personalization, customized offers, and relevant content. This supports fully customized experiences across web, app, email, and ads. Use a real-time decision engine powered by machine learning to determine the best next action in the moment. This improves both precision and speed, so you'll see wins sooner.

Model outputs should be interpretable, with confidence scores and guardrails. Track likelihood of conversion, revenue impact, and engagement. Highlights from experiments help justify investments and guide future improvements.
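Guardrails can be as simple as a confidence floor plus a block list, with a safe default when neither check passes. In this sketch, `scores` stands in for per-action conversion likelihoods from some model, and `choose_action`, the threshold, and the action names are all hypothetical. The returned reason string keeps each decision interpretable in logs.

```python
def choose_action(scores: dict, min_confidence: float = 0.6,
                  blocked: frozenset = frozenset(), default: str = "generic_content"):
    """Pick the highest-scoring allowed action, or fall back to a safe default.

    Returns (action, reason) so every decision can be audited later.
    """
    allowed = {action: s for action, s in scores.items() if action not in blocked}
    if not allowed:
        return default, "no_allowed_action"
    action, score = max(allowed.items(), key=lambda kv: kv[1])
    if score < min_confidence:
        return default, "low_confidence"
    return action, "model"

print(choose_action({"discount_10": 0.72, "free_shipping": 0.65}))
```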

In fintech contexts, watch for overdraft cues and risk signals to tailor messaging without friction. Use data about behavior, repayments, and credit needs to guide offers and education that reduces anxiety for customers and improves outcomes.

Real-time data collection and unification

Collect data from web, mobile, kiosks, and campaigns; apply identity resolution and store updates in a high-availability profile store. Maintain dashboards that display metrics such as match rate, latency, and duplication rate; these metrics guide pipeline tuning and governance.
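The dashboard metrics need precise definitions before they can guide tuning. This sketch assumes that match rate is the share of incoming records resolved to an existing profile, and that duplication rate is the share of profiles whose normalized email also appears on another profile. Both definitions and the helper names are assumptions.

```python
from collections import Counter

def match_rate(records_resolved: int, records_total: int) -> float:
    """Share of incoming records that resolved to an existing profile."""
    return records_resolved / records_total if records_total else 0.0

def duplication_rate(profile_emails: list) -> float:
    """Share of profiles whose normalized email also appears on another profile."""
    normalized = [e.strip().lower() for e in profile_emails]
    counts = Counter(normalized)
    duplicated = sum(n for n in counts.values() if n > 1)
    return duplicated / len(normalized) if normalized else 0.0

print(match_rate(930, 1000))
print(duplication_rate(["a@x.com", "A@x.com ", "b@x.com", "c@x.com"]))  # 0.5
```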

Activation, measurement, and governance

Activate in real time, delivering personalized content to websites, apps, and channels via APIs and tag integrations. Measure results against benchmarks: click-through rate, conversion rate, and churn reduction. Establish governance with consent, data minimization, and lineage tracking; maintain a simple, auditable model catalog and document coding standards. You'll know exactly which data drives which experiences.

Step 3: Create personalized content and rule-based experiences

Implement a lightweight personalization framework that maps customer signals to content blocks. Signals include profile attributes, recent interactions, and contextual cues. Each rule or model then decides which variant to show in a given view, making experiences more relevant. Pilot data shows a lift in engagement and better outcomes across these touchpoints. The approach has been refined with feedback from product and marketing teams and implemented in several pilot programs. Tailor content to intent within each channel. The process is not fully automated; human reviews remain in place to preserve quality.

Build a modular content library of dynamic blocks that can be assembled per rule. Rules draw on these assets to present messages, offers, and recommendations. Pull names and images from the customer profile to add a human touch, and use localization settings to ensure the right language appears. The process sounds straightforward, but maintain strict governance to prevent PII leakage.
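A simple guard against PII leakage is an allow-list of profile fields that templates may use, with a generic fallback when a required field is missing. This sketch relies on Python's standard `string.Template`. The allow-list, template texts, and locales are hypothetical.

```python
from string import Template

# Hypothetical allow-list: only these profile fields may reach rendered content.
ALLOWED_FIELDS = {"first_name", "city", "loyalty_tier"}

TEMPLATES = {
    "en": Template("Hi $first_name, new arrivals for $city are here."),
    "de": Template("Hallo $first_name, die Neuheiten für $city sind da."),
}
GENERIC = {"en": "New arrivals are here.", "de": "Die Neuheiten sind da."}

def render(profile: dict, locale: str, fallback_locale: str = "en") -> str:
    """Render a localized message using only allow-listed profile fields.

    If a template references a field that is missing or not allow-listed,
    substitute() raises KeyError and a generic message is used instead, so
    fields such as email or phone can never be injected.
    """
    lang = locale if locale in TEMPLATES else fallback_locale
    safe = {k: v for k, v in profile.items() if k in ALLOWED_FIELDS}
    try:
        return TEMPLATES[lang].substitute(safe)
    except KeyError:
        return GENERIC[lang]

print(render({"first_name": "Ana", "city": "Lisbon", "email": "ana@example.com"}, "en"))
```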

- Define signals and scoring: behavior, context, and preference signals; map to a small set of templates and models to ensure accuracy.

- Assemble and tag content templates: build modular assets and tag them by intent; map these templates to rule outcomes.

- Configure the rule engine: implement it with if-then logic or a lightweight decision graph; the engine selects the variant for each view.

- Governance and documentation: document the rules, owners, and version history; establish review cycles and avoid unsupported claims.

- Measurement and optimization: track duration, views, clicks, and conversions; run A/B tests to tune weights in the models and content selection.

- Scale and attribution: deliver across channels on the platform; maintain consistent tone and branding; credit creators for assets in reporting and dashboards.
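The if-then rule engine from the list can be sketched as prioritized rules evaluated against a dictionary of signals. The first match selects the variant, and the rule name is returned for documentation and audits. The rule names, signal keys, and variants are all hypothetical examples.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass
class Rule:
    name: str
    condition: Callable[[dict], bool]
    variant: str
    priority: int  # lower number is evaluated first

RULES = [
    Rule("cart_recovery", lambda s: s.get("cart_items", 0) > 0 and s.get("view") == "home",
         "cart_reminder_banner", 10),
    Rule("high_intent_category", lambda s: s.get("category_views", 0) >= 3, "category_picks", 20),
    Rule("new_visitor", lambda s: s.get("visits", 0) <= 1, "welcome_offer", 30),
]

def select_variant(signals: dict, rules=RULES, default: str = "default_hero"):
    """Return (variant, rule_name) for the first matching rule, else the default."""
    for rule in sorted(rules, key=lambda r: r.priority):
        if rule.condition(signals):
            return rule.variant, rule.name  # log rule_name so outcomes stay attributable
    return default, "default"

print(select_variant({"cart_items": 2, "view": "home", "visits": 5}))
```

Keeping conditions as small named rules, rather than one large function, makes the version history and review cycles described above easier to maintain.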

There is no guarantee of outcomes, but a disciplined approach reduces risk and increases the odds of success. Start with a pilot, document learnings, and extend to the full platform as you tighten rules and templates.

Step 4: Orchestrate multi-channel journeys and timing

Create a unified timing map for all channels and enforce it across platforms. This keeps experiences consistent and reduces friction as audiences move between touchpoints.

This approach emphasizes scalability: decoupling timing from content and centralizing data enables adding channels without rearchitecting campaigns.

Rely on real-time signals only for the initial touch, then follow a structured rhythm to maximize impact across channels. Audiences respond better when sequencing feels natural, and we've seen consistent engagement gains across markets when timing is centralized.

This orchestration involves data from multiple sources: CRM, web analytics, offline POS, and campaign platforms.

Audiences see a smoother path when data is synchronized and timing is consistent across channels. We've tested across markets and observed a lift in engagement when alignment is maintained across multiple touches.

- Define the main path order for each use case and specify delivery windows across channels; keep the sequence predictable so audiences see the right message at the right moment.

- Use integrations to build a single source of truth for timing and content; this reduces manual effort and unlocks scalability.

- Segment audiences by behavior, preference, and channel affinity; tailor messages accordingly and reuse assets across multiple touches.

- Set triggers and offsets with precise timing: immediate event-based touch, then 15 minutes for email, 1 hour for push, 24 hours for SMS, and a 72-hour offline engagement if needed.

- Account for platform-specific constraints: character limits, media formats, opt-outs; test across channels to maintain smooth delivery.

- Include offline campaigns in the same orchestration: in-store offers or appointment reminders should align with online cues and data refreshes so teams work with a complete view.

- Monitor delivery and engagement across campaigns; track open rate, click-through rate, conversions, and revenue per recipient to identify where improvements are most effective; adjust the timing matrix accordingly.

- In multi-channel implementations, start with a minimal viable flow and gradually scale as you validate results; the main aim is consistency across channels while keeping efforts focused.
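The timing matrix from the list (immediate touch, then 15 minutes, 1 hour, 24 hours, and 72 hours) can live in one central table that every channel reads. This sketch builds a per-customer schedule that skips opted-out channels. Stopping the sequence once the customer converts is an added assumption, not part of the original matrix, and the channel label `realtime` is hypothetical.

```python
from datetime import datetime, timedelta

# Central timing matrix: channel -> offset from the triggering event.
TIMING_MATRIX = [
    ("realtime", timedelta(0)),          # immediate event-based touch (e.g. on-site or in-app)
    ("email", timedelta(minutes=15)),
    ("push", timedelta(hours=1)),
    ("sms", timedelta(hours=24)),
    ("offline", timedelta(hours=72)),    # e.g. in-store offer or appointment reminder
]

def build_schedule(trigger_time: datetime, opted_out=frozenset(), converted_at=None):
    """Return (channel, send_time) pairs in order.

    Opted-out channels are skipped, and (by assumption) the sequence stops at
    the first touch that would land after the customer has converted.
    """
    schedule = []
    for channel, offset in TIMING_MATRIX:
        send_time = trigger_time + offset
        if channel in opted_out:
            continue
        if converted_at is not None and send_time >= converted_at:
            break
        schedule.append((channel, send_time))
    return schedule

t0 = datetime(2024, 5, 1, 9, 0)
for channel, when in build_schedule(t0, opted_out={"sms"}):
    print(channel, when.isoformat())
```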

Step 5: Measure impact, run tests, and iterate the experience

Set a baseline KPI for revenue per user and engagement, then run a regular cycle of A/B tests to capture uplifts across mobile and web experiences.
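Before treating a measured uplift as real, check that it is unlikely to be noise. A two-proportion z-test is one standard check for conversion-rate A/B tests. The sketch below uses the standard library's `math.erfc` for the two-sided p-value. The counts are illustrative and assume 10,000 users per arm.

```python
from math import erfc, sqrt

def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Two-sided z-test for a difference in conversion rates (B minus A).

    Returns (absolute difference, z statistic, p-value).
    """
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = erfc(abs(z) / sqrt(2))  # equals 2 * (1 - standard normal CDF of |z|)
    return p_b - p_a, z, p_value

# Illustrative: 2.8% vs 3.4% conversion with 10,000 users per arm.
diff, z, p = two_proportion_z_test(280, 10_000, 340, 10_000)
print(f"diff={diff:+.2%} z={z:.2f} p={p:.3f}")
```

With these counts the difference clears a conventional 0.05 threshold; with much smaller samples the same rates might not, which is why a fixed test duration and sample size should be set before the test starts.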

Build a studio-style dashboard that earns trust by making clear which features drive the improvements, using signals from the covered touchpoints across mobile and web. Keep the objects of measurement well defined so teams can act on what matters.

Use machine-driven, generative analytics to identify patterns and check whether effects hold across segments. Align test strategies with business goals, and use storytelling to explain the why behind results and guide decisions.

Capture data with high-quality pipelines, ensure coverage across data sources, and use a system that can attribute uplifts to specific features. This creates a repeatable cycle and keeps the team focused on actions that move metrics.

Regular reviews every two weeks help you convert insights into changes that customers feel. Whether you deploy a targeted test or a broader variation, distill findings into concrete actions and update the experience accordingly.

Measurement framework

Track a small set of core metrics, align them with object-level goals, and maintain a single source of truth to build trust. Sample regularly across mobile and other channels to ensure coverage without noise, and document the rationale behind each test to support future iterations.

Implementation details

| Metric | Baseline | Target | Uplift | Notes |
|---|---|---|---|---|
| Conversion rate | 2.8% | 3.4% | +0.6 pp | Mobile-first feature test |
| Avg. session duration | 94 s | 110 s | +16 s | Engaging storytelling elements |
| Retention (7-day) | 28% | 32% | +4 pp | Generative content boosts engagement |

Veo 3 Behind the Scenes: architecture, data flow, privacy, and deployment

Start with an edge-first architecture; it improves responsiveness and reduces cloud processing demand. In Veo 3, deploy on-device pre-processing that filters noise, detects players, and tags media frames for quick play-by-play, then combine it with cloud analytics for deeper insights. This approach speeds up first results while supporting accurate outcomes.

Architecture and Data Flow

The base architecture blends on-device processing, edge gateways, and cloud services. Ingest streams from cameras and wearables, route them through event-driven microservices, and store long-term results in a data lake. Real-time analytics focus on popular games and key plays; models identify events, interactions between players, and physiological cues such as pace and effort. Combining on-device and cloud processing balances latency with depth of insight. Noise is suppressed before storage, improving data quality for every analyst and editor who uses the system. Highlight key moments of play to guide coaching decisions and content creation.

The platform supports knowledge-sharing by exposing clean, annotated data to portals used by teams and partners. For example, portals for na-kd campaigns can reuse event data to tailor content across omnichannel experiences. Every data stream passes through a privacy-aware layer to support adoption of data-sharing policies across regions.

Privacy, Adoption, and Deployment

Privacy is built in: apply data minimization, encryption, and strict access controls. For Veo 3 deployments, default to shorter retention for raw video, tokenize identifiers, and separate personal data from analytics. Establish consent workflows and a clear data-use policy across regions. This emphasis on privacy improves trust and supports faster adoption by clubs and media partners. The deployment model uses blue/green and canary releases to reduce downtime while you test new models and pipelines. Use containerization and orchestration to scale processing as demand rises during tournaments or seasons.
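Tokenizing identifiers and enforcing shorter retention for raw video can be sketched with the standard library. The sketch uses a keyed hash (HMAC-SHA256), so tokens are stable for joins but cannot be recomputed by anyone without the key, which stays outside the analytics environment. The 30-day retention value and the helper names are hypothetical defaults, not Veo 3 settings.

```python
import hashlib
import hmac
from datetime import datetime, timedelta, timezone

RAW_VIDEO_RETENTION = timedelta(days=30)  # hypothetical default; set per policy and region

def tokenize(identifier: str, secret_key: bytes) -> str:
    """Keyed pseudonymization: same identifier and key give the same token,
    so analytics can join on it without ever seeing the raw identifier."""
    return hmac.new(secret_key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()

def is_expired(captured_at: datetime, now: datetime,
               retention: timedelta = RAW_VIDEO_RETENTION) -> bool:
    """True when raw footage is older than the retention window and should be deleted."""
    return now - captured_at > retention

key = b"load-from-a-secrets-manager"  # placeholder; never hard-code a real key
print(tokenize("player-17", key)[:16])
print(is_expired(datetime(2024, 1, 1, tzinfo=timezone.utc),
                 datetime(2024, 3, 1, tzinfo=timezone.utc)))  # True
```

Separating the key from the analytics store is what keeps personal data separate from analytics: without the key, the tokens cannot be mapped back to players.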

In deployment, use a modular stack: edge agents at venues, a central data platform, and omnichannel portals for editors, coaches, and audience-facing media. Roll out in phases: start with a limited set of venues and gradually expand to more events. This allows gradual adoption and avoids disruption. The architecture supports knowledge-sharing by exporting anonymized or consented data into knowledge bases that teams can reuse for new activations, campaigns, or games. Together, these elements continually improve the experience for players and fans.
